Bertrand & Mullainathan (2004, .htm) is one of the best-known and most-cited American Economic Review (AER) papers [1]. It reports a field experiment in which resumes given typically Black names (e.g., Jamal and Lakisha) received fewer callbacks than those given typically White names (e.g., Greg and Emily). This finding is interpreted as evidence of racial discrimination in labor markets.

Deming, Yuchtman, Abulafi, Goldin, and Katz (2016, .htm) published a paper in the most recent issue of the AER. It also reports an audit study in which resumes were sent to recruiters. Although its goal was to estimate the impact of for-profit degrees, the authors also manipulated the race of job applicants via names. They did not replicate the famous finding (secondary for their purposes), which at least three other studies had replicated (.ssrn | .pdf | .htm). Why? What was different in this replication? [2]

Small-Telescopes Test

The new study had more than twice the sample size (N = 10,484) of the old study (N = 4,870). It also included Hispanic names, but even so, the sample for White and Black names was about 50% larger than in the old study.

Callback rates in the two studies were (coincidentally) almost identical: 8.2% in both. In the famous study, Whites were 3 percentage points (pp) more likely to receive a callback than non-Whites. In the new study, they were 0.4 pp less likely than non-Whites, not significantly different from 0: χ²(1) = .61, p = .44 [3].
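For readers who want to see how a χ²(1) statistic like the one above maps to its p-value, here is a minimal sketch. It assumes a Pearson chi-square test without continuity correction on a 2×2 table of race by callback; the post does not say which variant was run, and the function names are hypothetical. Because a χ²(1) variable is the square of a standard normal, the p-value reduces to a complementary error function.

```python
import math


def chi2_2x2(a: int, b: int, c: int, d: int) -> float:
    """Pearson chi-square (no continuity correction) for the 2x2 table
    [[a, b], [c, d]], e.g. rows = White / non-White resumes,
    columns = called back / not called back."""
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        raise ValueError("every row and column total must be positive")
    return n * (a * d - b * c) ** 2 / denom


def p_value_df1(chi2: float) -> float:
    """Upper-tail p-value for a chi-square statistic with 1 degree of freedom.

    chi2(1) is the square of a standard normal Z, so
    P(X > chi2) = P(|Z| > sqrt(chi2)) = erfc(sqrt(chi2 / 2)).
    """
    return math.erfc(math.sqrt(chi2 / 2.0))


if __name__ == "__main__":
    # Statistic reported for the new study: chi2(1) = .61
    print(round(p_value_df1(0.61), 3))
```

Plugging in .61 gives roughly .435, which matches the reported p = .44 once you allow for the rounding of the χ² value.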

The small-telescopes test (SSRN) asks whether a replication result rules out effects big enough to have been detectable by the original study. The confidence interval around the new estimate spans from -1.3 pp (anti-White) to +0.5 pp (pro-White). With the original sample size, an effect as small as that upper bound, +0.5 pp, would yield a meager 9% statistical power. The new study's results are thus inconsistent with effects big enough to be detectable by the older study, so we accept the null. (For more on accepting the null: DataColada[42].)
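The power figure can be approximated with a standard two-proportion calculation. The sketch below is a normal-approximation version, not necessarily how the 9% above was computed. It assumes the original 4,870 resumes were split evenly between White and Black names and uses the 8.2% callback rate as a common base rate; the function names are hypothetical. Under those assumptions it gives just under 10% power at +0.5 pp, in the same ballpark as the 9% in the text. It also finds the gap the original study had 33% power to detect (the benchmark used in the small-telescopes paper), about 1.2 pp, which is well above the new study's +0.5 pp upper bound. The same function shows that the +2.5 pp bound mentioned in footnote 3 would have had close to 90% power.

```python
import math

Z_CRIT = 1.959963984540054  # two-sided critical value for alpha = .05


def norm_cdf(x: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def power_two_proportions(diff: float, n_per_group: int, base_rate: float) -> float:
    """Approximate power of a two-sided, alpha = .05 z-test comparing two
    callback rates when the true gap is `diff` (a proportion: 0.005 = 0.5 pp).
    Normal approximation with one common base rate for the standard error."""
    se = math.sqrt(2.0 * base_rate * (1.0 - base_rate) / n_per_group)
    z = abs(diff) / se
    # probability the estimate lands beyond either critical value
    return norm_cdf(z - Z_CRIT) + norm_cdf(-z - Z_CRIT)


def effect_for_power(target: float, n_per_group: int, base_rate: float) -> float:
    """Gap that yields the requested power, found by bisection.
    Power rises monotonically with |diff|, so bisection on [0, 0.5] converges."""
    lo, hi = 0.0, 0.5
    for _ in range(100):
        mid = (lo + hi) / 2.0
        if power_two_proportions(mid, n_per_group, base_rate) < target:
            lo = mid
        else:
            hi = mid
    return hi


if __name__ == "__main__":
    n = 4870 // 2  # assumption: original sample split evenly by race
    rate = 0.082   # overall callback rate reported for both studies
    print("power at +0.5 pp:", round(power_two_proportions(0.005, n, rate), 3))
    d33 = effect_for_power(1 / 3, n, rate)
    print("gap with 33% power:", round(100 * d33, 2), "pp")
    print("new upper bound below it:", 0.005 < d33)
    print("power at +2.5 pp:", round(power_two_proportions(0.025, n, rate), 3))
```

The bisection is used instead of a closed-form inverse so the sketch needs nothing beyond the standard library.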

Why no effect of race?

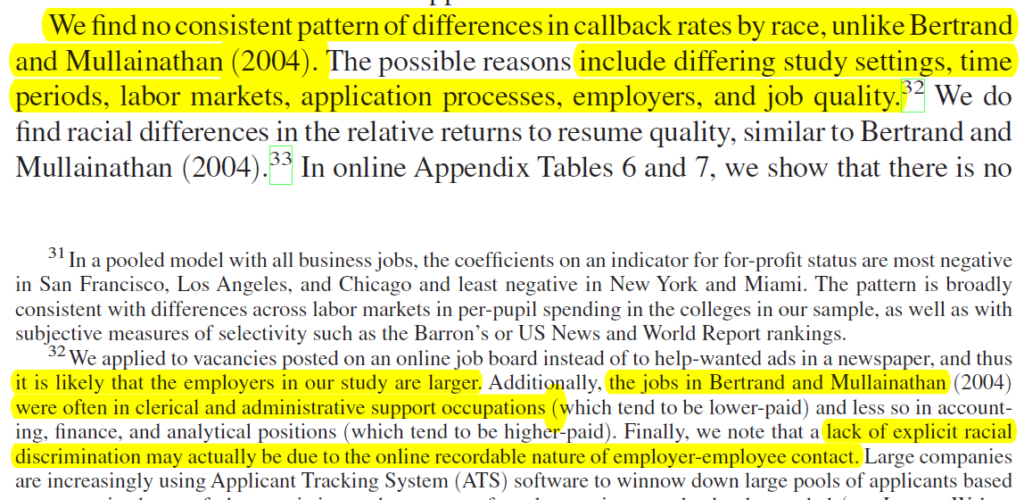

Deming et al. list a few differences between their study and Bertrand & Mullainathan's, but do not empirically explore their role [4]:

In addition to these differences, the new study used a different set of names. They are not listed in the paper, but the first author quickly provided them upon request.

That names thing is key for this post.

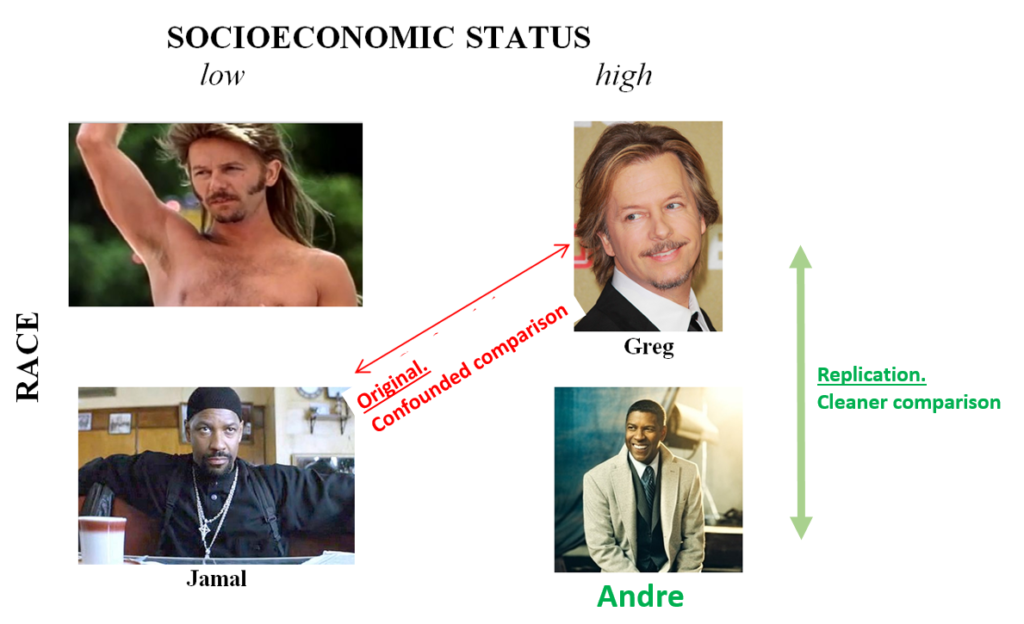

In DataColada[36], I wrote about a possible Socioeconomic Status (SES) confound in Bertrand and Mullainathan (2004) [5]. Namely, their Black names (e.g., Rasheed, Leroy, Jamal) seem low SES, while the White names (e.g., Greg, Matthew, Todd) do not. The Black names from the new study (e.g., Malik, Darius and Andre) do not seem to share this confound. Below is the picture I used to summarize Colada[36]; I modified it (in green font) to capture why I am interested in the new study's Black names [6].

But I am a Chilean Jew with a PhD; my own views on whether a particular name hints at SES may not be terribly representative of those of an everyday American. So I collected some data.

Study 1

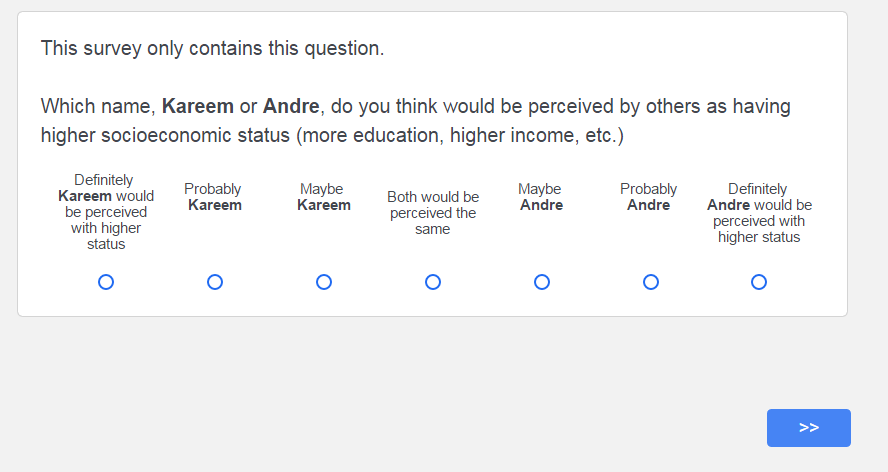

In this study, I randomly paired a Black name used in the old study with a Black name used in the new study, and N=202 MTurkers answered this question (Qualtrics survey file and data: .htm):

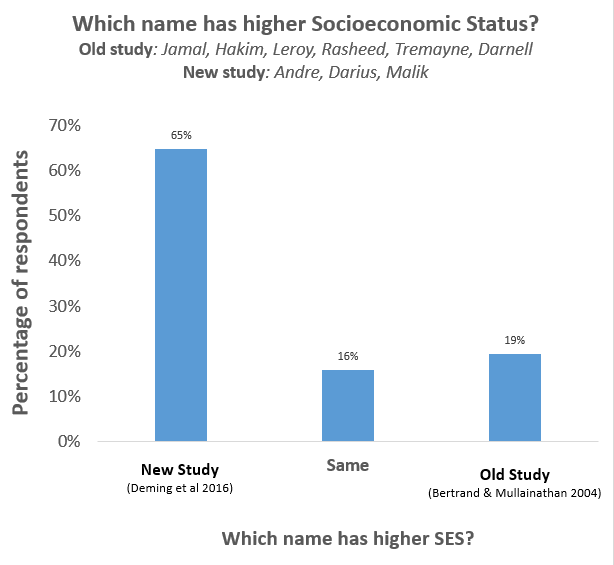

Each respondent saw one random pair of names, in counterbalanced order. The results were more striking than I expected [7].

Note: Tyrone was used in both studies, so I did not include it.

Study 2

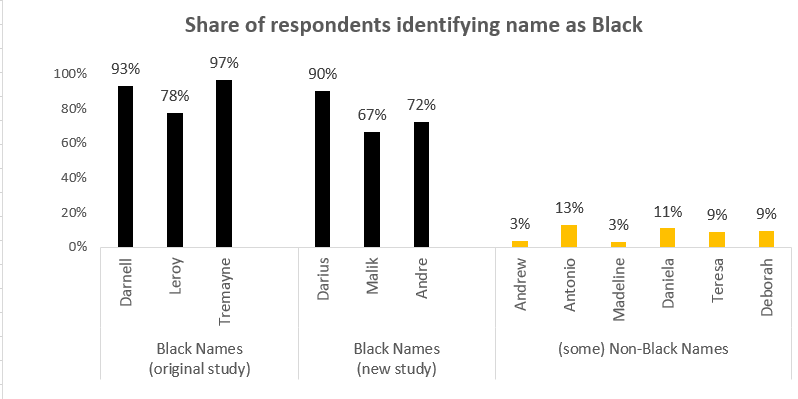

One possible explanation for Study 1’s result (and the failure to replicate in the recent AER article) is that the names used in the new study were not perceived to be Black names. In Study 2, I asked N=201 MTurkers to determine the race and gender of some of the names from the old and new studies [8].

Indeed, the Black names in the new study were perceived as somewhat less Black than those used in the old one. Nevertheless, they were vastly more likely to be perceived as Black than were the control names. Based on this figure alone, we would not expect discrimination against Black names to disappear in the new study. But it did.

In Sum.

The lower callback rates for Jamal and Lakisha in the classic 2004 AER paper, and in the successful replications mentioned earlier, are as consistent with racial as with SES discrimination. The SES account also parsimoniously explains this one failure to replicate the effect. But this conclusion is tentative at best; we are comparing studies that differ on many dimensions (and the new study had some noteworthy glitches; see footnote 4). To test racial discrimination in particular, and name effects in general, we need a single study that manipulates race and SES orthogonally, or at least uses names pretested to differ only on the dimension of interest. I don't think any audit study has done that.


Author feedback.

Our policy is to contact authors whose work we discuss to request feedback and give an opportunity to respond within our original post. I contacted the 7 authors behind the two articles and received feedback from 5 of them. I hope to have successfully incorporated their suggestions; they focused on clearer use of "replication" terminology, and broader representation of the relevant literature.

Footnotes.

- According to Web of Science (WOS), its 682 citations make it the 9th most-cited article published since 2000. Aside: WOS should report an age-adjusted citation index. [↩]

- For a review of field experiments on discrimination see Bertrand & Duflo (.htm) [↩]

- I carried out these calculations using the posted data (.dta | .csv). The new study reports regression results with multiple covariates and on subsamples. In particular, in Tables 3 and 4 they report results separately for jobs that do and do not require college degrees. For jobs without required degrees, White males did significantly worse: they were 4 percentage points (pp) less likely to be called back. For jobs that do require a college degree, the point estimate is a -1.5 pp effect (still anti-White), but with a confidence interval that includes +2.5 pp (pro-White), in the ballpark of the 3 pp of the famous study and large enough to be detectable with its sample size. So for jobs without degree requirements the result is a conclusive failure to replicate; for those that require a college degree, it is inconclusive. The famous study investigated jobs that did not require a college degree. [↩]

- Deming et al. surprisingly do not break down results for Hispanics vs. Blacks in their article; I emailed the first author, and he explained that, due to a glitch in their computer program, they could not differentiate Hispanic from Black resumes. This is not mentioned in their paper. The glitch opens up another possible explanation: Blacks may have been discriminated against just as in the original study, but Hispanics were favored by a larger amount, so that when the two are collapsed into a single group, the negative effect is not observable. [↩]

- Fryer & Levitt (2004) have a QJE paper on the origin and consequences of distinctively Black names (.htm) [↩]

- The opening section mentions three articles that replicate Bertrand and Mullainathan's finding of Black names receiving fewer callbacks. The Black names in those papers also seem low SES to me, but I did not include them in my data collection. For instance, the names include Latoya, Tanisha, DeAndre, DeShawn, and Reginald. They do not include any of the high-SES Black names from Deming et al. [↩]

- It would be most useful to compare SES between White and Black names, but I did not include White names in this study, worried that respondents would think "I am not going to tell you that I think White names are higher SES than Black names." Possibly my favorite Mike Norton study (.htm) documents that people are OK making judgments between two White faces or two Black faces, but are reluctant to do so between a White and a Black one. [↩]

- From Bertrand & Mullainathan I chose three Black names: the one their participants rated the Blackest, the median, and the least Black; see their Table A1. [↩]
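The masking scenario in footnote 4 can be written out as a weighted average. Let r_W, r_B and r_H be the callback rates for White, Black and Hispanic resumes, and let w be the share of Black resumes among the non-White ones (w is hypothetical here; it is not reported in the post). The pooled White vs. non-White gap is then a weighted average of the two subgroup gaps. With an illustrative even split and the original 3 pp anti-Black gap, a 3 pp pro-Hispanic gap would cancel it exactly; a larger pro-Hispanic gap would flip the pooled sign.

```latex
\begin{aligned}
\bar{r}_N &= w\,r_B + (1-w)\,r_H \\
r_W - \bar{r}_N &= w\,(r_W - r_B) + (1-w)\,(r_W - r_H) \\
&= \tfrac{1}{2}\,(3\ \text{pp}) + \tfrac{1}{2}\,(-3\ \text{pp}) = 0
\quad \text{if } w = \tfrac{1}{2}.
\end{aligned}
```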