We have argued that, for most effects, it is impossible to identify the average effect (datacolada.org/33). The argument is subtle (but not statistical), and given the number of well-informed people who seem to disagree, perhaps we are simply wrong. This is my effort to explain why we think identifying the average effect is so hard. I am going to take a while to explain my perspective, but the boxed-text below highlights where I am eventually going.

When averaging is easy: Height at Berkeley.

First, let’s start with a domain where averaging is familiar, useful, and plausible. If I want to know the average height of a UC Berkeley student I merely need a random sample, and I can compute the average and have a good estimate. Good stuff.

My sense is that when people think that we should calculate the average effect size they are picturing something kind of like calculating average height: First sample (by collecting the studies that were run), then calculate (by performing a meta-analysis). When it comes to averaging effect sizes, I don’t think we can do anything particularly close to computing the "average" effect.

The effect of happiness on helpfulness is not like height

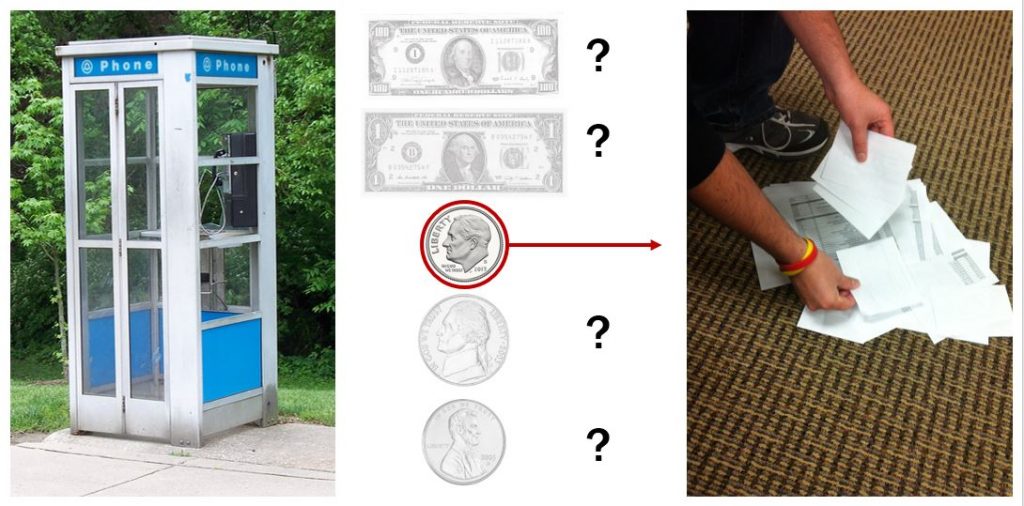

Let’s consider an actual effect size from psychology: the influence of positive emotion on helping behavior. The original paper studying this effect (or the first that I think of) manipulates whether or not a person unexpectedly finds a dime in a phone booth and then measures whether the person stops to help pick up some spilled papers. When people have the $.10 windfall they help 88% of the time, whereas the others help only 4% of the time[1]. So that is the starting point, but it is only one study. The same paper, for example, contains another study manipulating whether people received a cookie and measures minutes volunteered to be a confederate for either a helping experiment, in one condition, or a distraction experiment, in another (a 2 x 2 design). Cookies increased minutes volunteered for helping (69 minutes vs. 16.7 minutes) and decreased minutes volunteered for the distraction experiment (20 minutes vs. 78.6 minutes) [2]. OK, so the meta-analyst can now average those effect sizes in some manner and conclude that they have identified an unbiased estimate of the average effect of positive emotion on helping behavior.

What about the effect of nickels on helpfulness?

However, that is surely not right, because those are not the only two studies investigating the effect of happiness on helpfulness. Perhaps, for example, there was an unreported study using nickels, rather than dimes, that did not get to p<.05. Researchers are more likely to tell you about a result, and journal editors are more likely to publish a result, if it is statistically significant. This publication bias is the main topic discussed by meta-analytic tool developers, and there have been lots of efforts to find a way to correct for it, including p-curve. But what exactly are those efforts aiming to correct? What is the right set of studies to attempt to reconstruct?

The studies we see versus the studies we might see

Because we developed p-curve, we know which answer it is aiming for: The true average effect of the studies it includes [3]. So it gives an unbiased estimate of the dimes and cookies, but is indifferent to nickels. We are pretty comfortable owning that limitation – p-curve can only tell you about the true effect of the studies it includes. One could reasonably say at this point, “but wait, I am looking for the average effect of happiness on helping, so I want my average to include nickels as well.” This gets to the next point: What are the other studies that should be included?

Let’s assume that there really is a non-significant (p>.05) nickels study that was conducted. Would we find out about it? Sometimes. Perhaps the p-value is really close to .05, so the authors are comfortable reporting it in the paper? [4] Perhaps it creeps into a book chapter some time later and the p-values are not so closely scrutinized? Perhaps the experimenter is a heavy open-science advocate and writes a Python script that automatically posts all JASP output on PsyArXiv regardless of what it is? The problem is not whether we will see any non-significant findings, the problem is whether we would see all of them. No one believes that we would catch all of them, and presumably everyone believes that we would see a biased sample – namely, we would be more likely to see those studies which best serve the argument of the people presenting them. But we know very little about the specifics of that biasing. How likely are we to see a p = .06? Does it matter if that study is about nickels, helping behavior, or social psychology, or are non-significant findings more or less likely to be reported in different research areas? Those aren’t whimsical questions either, because an unknown filter is impossible to correct for. Remember the averaging problem at the beginning of this post – the average height of students at UC Berkeley – and think of how essential the sampling was for that exercise. If someone said that they averaged all the student heights in their Advanced Dutch Literature class we would be concerned that the sample was not random, and since it likely has more Dutch people (who are peculiarly tall), we would worry about bias. But how biased? We have no idea. The same goes for the likelihood of seeing a non-significant nickels study. We know that we are less likely to see it, but we don’t know how much less likely [5]. It is really hard to integrate these into a true average.
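To make the unknown-filter problem concrete, here is a minimal simulation sketch. Everything in it is an assumption made for illustration: the true effect, the standard error, the one-sided z > 1.96 significance rule, and especially `q_nonsig`, a hypothetical probability that a non-significant study gets reported. The only point is that the naive average of the visible studies moves with `q_nonsig`, which is exactly the number we do not know.

```python
import random
import statistics


def simulate_published_mean(true_effect, n_studies, se, q_nonsig, seed=0):
    """Mean estimate among studies that survive a hypothetical publication filter.

    Each study's estimate is drawn from Normal(true_effect, se).
    A study with estimate / se > 1.96 is always reported; any other study
    is reported with probability q_nonsig (an assumed, unknowable quantity).
    """
    rng = random.Random(seed)
    published = []
    for _ in range(n_studies):
        estimate = rng.gauss(true_effect, se)
        significant = estimate / se > 1.96
        if significant or rng.random() < q_nonsig:
            published.append(estimate)
    return statistics.mean(published)


# Illustrative values only; none of these come from real studies.
TRUE_EFFECT = 0.2
SE = 0.15

results = {
    q: simulate_published_mean(TRUE_EFFECT, n_studies=20000, se=SE, q_nonsig=q)
    for q in (0.0, 0.1, 0.5, 1.0)
}

for q, mean_published in results.items():
    print(f"q_nonsig={q:.1f}  mean of visible studies={mean_published:.3f}")
```

Only when `q_nonsig` is 1.0 (every study is visible) does the naive average land near the assumed true effect. For any other value the visible average is inflated, and by an amount that depends on a filter we cannot observe.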

But ok, what if we did see every single conducted study?

What if we did know the exact size of that bias? First: wow. Second, that wouldn’t be the only bias that affects the average, and it wouldn’t be the largest. The biggest bias is almost certainly in what studies researchers choose to conduct. Think back to the researchers choosing to use a dime in a phone booth. What if they had decided instead to measure helping behavior differently? Rather than seeing if people picked up papers, they instead observed whether people chose to spend the weekend cleaning the experimenter’s septic tank. That would still be helpful, so the true effect of such a study would indisputably be part of the true average effect of happiness on helping. But the researchers didn’t use that measure, perhaps because they were concerned that the effect would not be large enough to detect. Also, the researchers did not choose to manipulate happiness by leaving a briefcase of $100,000 in the phone booth. Not only would that be impractical, but that study is less likely to be conducted because it is not as compelling: the expected effect seems too obvious. It is not particularly exciting to say that people are more helpful when they are happy, but it is particularly exciting to show that a dime generates enough happiness to change helpfulness [6]. So the experiments people conduct are a tiny subset of the experiments that make up the true average effect, they are a biased subset (no one randomly generates an experimental design, nor should they), and those biases are entirely opaque. But if you want a true average, you need to know the exact magnitude of those biases.
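The same point can be put as simple arithmetic. In the sketch below, every number is invented: the design names echo the examples above, but the "true effects" and the chances that each design gets conducted are hypothetical placeholders, not estimates of anything. It only shows that the average you get depends on how the designs are weighted, and researchers' choices are one such (opaque) weighting.

```python
# Hypothetical design space. Every value is made up for illustration.
designs = {
    "dime -> pick up papers":      {"true_effect": 0.5, "p_conducted": 0.60},
    "cookie -> minutes of help":   {"true_effect": 0.4, "p_conducted": 0.33},
    "dime -> clean septic tank":   {"true_effect": 0.0, "p_conducted": 0.03},
    "cookie -> clean septic tank": {"true_effect": 0.0, "p_conducted": 0.02},
    "$100,000 -> pick up papers":  {"true_effect": 0.9, "p_conducted": 0.02},
}


def average_effect(designs, weight_key=None):
    """Weighted mean of true effects; equal weights when weight_key is None."""
    total_weight = 0.0
    weighted_sum = 0.0
    for spec in designs.values():
        weight = 1.0 if weight_key is None else spec[weight_key]
        total_weight += weight
        weighted_sum += weight * spec["true_effect"]
    return weighted_sum / total_weight


# Average over every design that could be run, weighted equally.
all_designs_average = average_effect(designs)

# Average over designs weighted by how likely researchers are to run them.
conducted_average = average_effect(designs, weight_key="p_conducted")

print(f"all possible designs:   {all_designs_average:.3f}")
print(f"designs actually run:   {conducted_average:.3f}")
```

The two averages differ, and changing the assumed `p_conducted` values changes the second one again. Without knowing those weights, there is no way to get from the studies that were run back to the average over the studies that could have been run.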

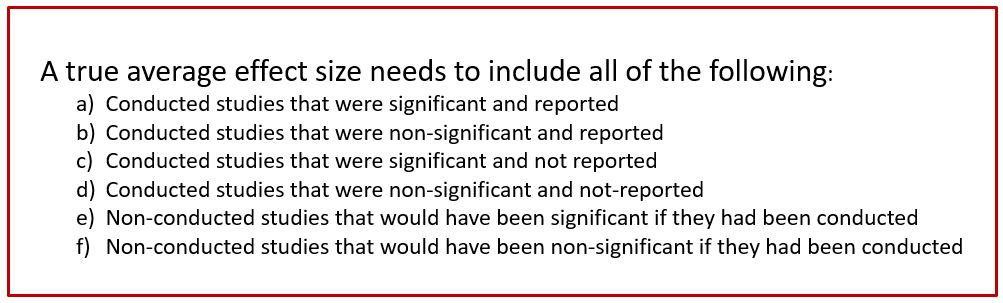

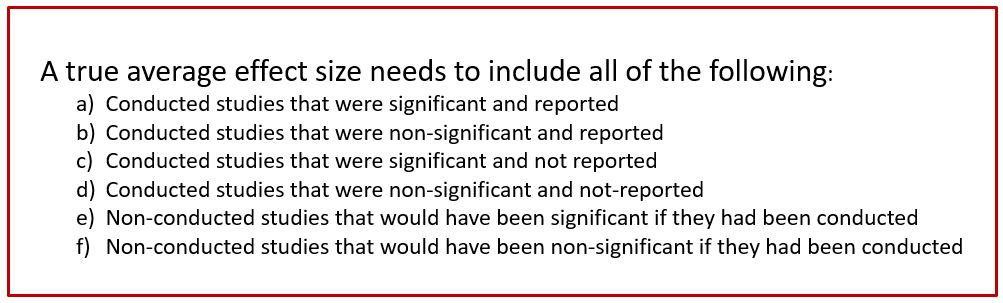

So what all is included in an average effect size?

So now I return to that initial list of things that need to be included in the average effect size (reposted right here to avoid unnecessary scrolling):

That is a tall order. I don’t mind someone wanting that answer, and I fully acknowledge that p-curve does not deliver it. P-curve only hopes to deliver the average effect in (a).

If you want the “Big Average” effect (a, b, c, d, e, and f) then you need to clarify that you have access to the population or can perfectly estimate the biases that influence the size of each category. That is not me being dismissive or dissuasive, it is just the nature of averaging. We are so pessimistic about calculating that average effect size that we use the shorthand of saying that the average effect size does not exist.[7]

But that is a statement of the problem and an acknowledgment of our limitations. If someone has a way to handle the complications above, they would have at least three very vocal advocates.

- ! [↩]

- !! [↩]

- “True effect” is kind of conceptual, but in this case I think that there is some agreement on the operational definition of “true.” If you conducted the study again, you would expect, on average, the “true” result. So if, because of bias or error, the published cookie effect is unusually smaller or larger than the true underlying effect, you are still most interested in the best prediction of what would happen if you ran the study again. I am open to being convinced that there is a different definition of “true”, but I think this is a pretty uncontroversial one. [↩]

- Actually, it is worth noting that the cookie experiment features one critical test with a t-value of 1.96. Given the implied df for that study, the p-value would be >.05, though it is reported as p<.05. The point is, those authors were willing to report a non-significant p-value. [↩]

- Scientists, statisticians, psychologists, and probably postal workers, bobsledders, and pet hamsters have frequently bemoaned the absurdity of a hard cut-off of p<.05. Granted. But it does provide a side benefit for this selection-bias issue: If p>.05, we have no idea whether we will see it, but if p<.05, we know that the p-value hasn’t kept us from seeing it. [↩]

- Or to quote the wonderful Prentice and Miller (1992), who in describing the cookie finding, say “the power of this demonstration derives in large part from the subtlety of the instigating stimulus… although mood effects might be interesting however heavy-handed the manipulation that produced them, the cookie study was perhaps made more interesting by its reliance on the minimalist approach.” p. 161. [↩]

- It is worth noting that there is some variation between the three of us on the impracticality of calculating the average effect size. The most optimistic of us (me, probably) believes that under a very small number of circumstances – none of which are likely to happen for psychological research – the situation might be well-defined enough for the average effect to be understood and calculated. The most pessimistic of us think that even that limited set of circumstances is essentially a non-existent set. From that perspective, the average effect truly does not exist. [↩]