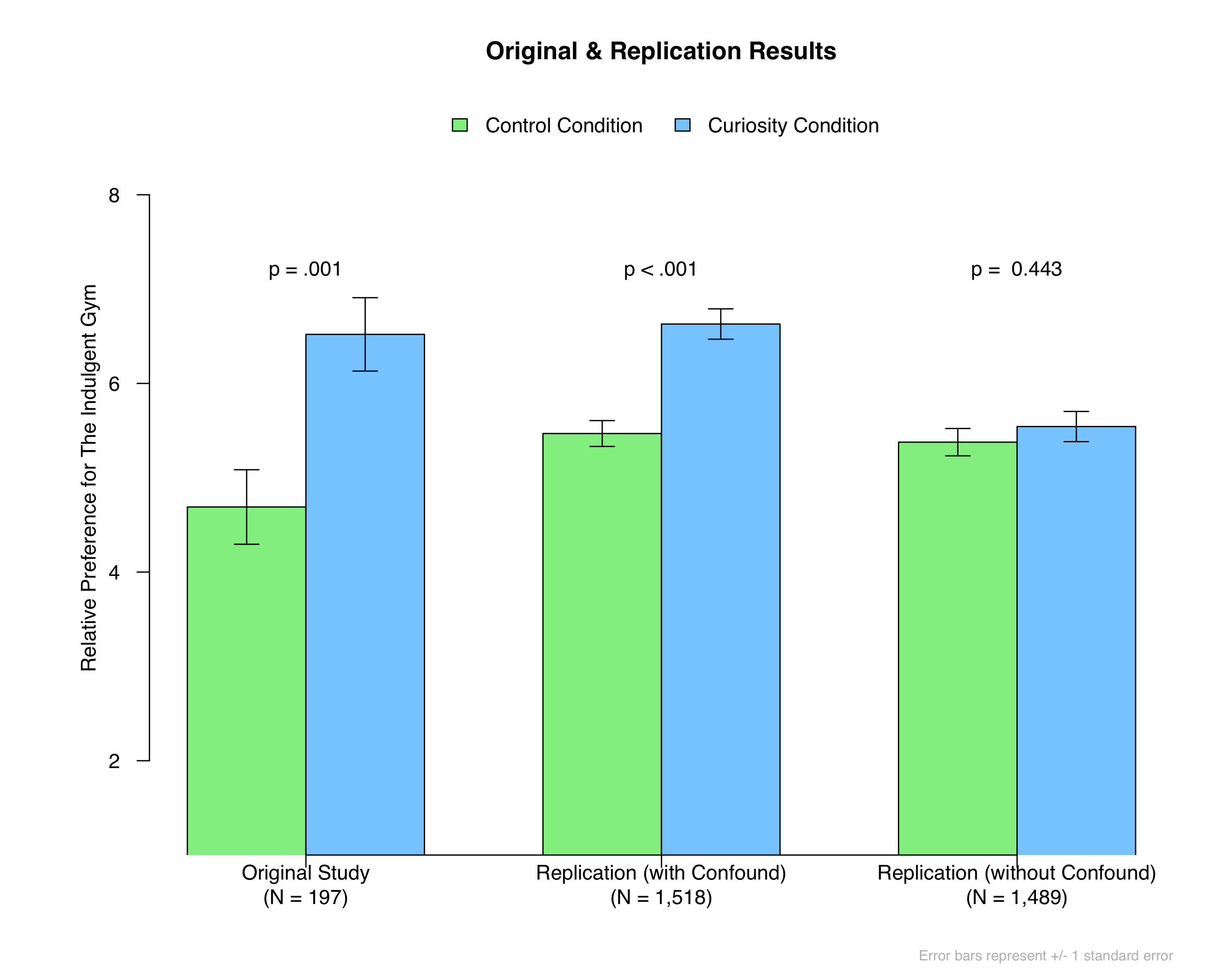

In this installment of Data Replicada, we report on Study 3 of a recently published Journal of Consumer Research article entitled, “Does Curiosity Tempt Indulgence?” (.htm). In that study, participants were induced to feel curious or not and then were asked to (hypothetically) choose between two gym memberships, one for a “normal” gym and one for an “indulgent” gym (on a 9-point scale). The authors reported that those induced to feel curious were more likely to choose the indulgent gym.

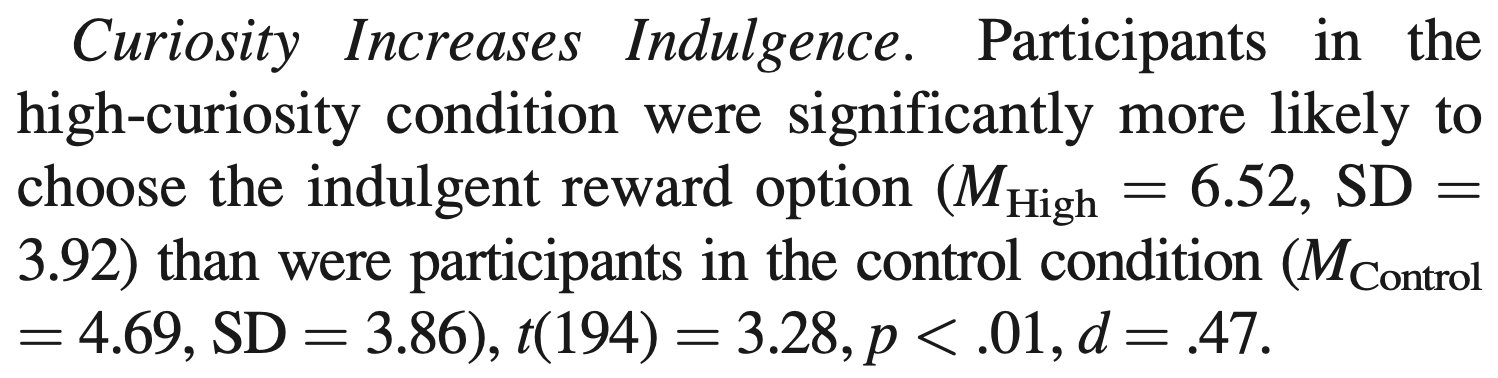

On its own, this result seems in keeping with many others. Many articles report evidence for these kinds of spillover effects, whereby a feeling induced by one task is purported to influence some unrelated task. But we were surprised by the magnitude and significance of the effect:

In our experience, spillover effects are not this big, and only rarely are they this significant, particularly with a modest sample size of about 200 people. So we were interested in trying to replicate it.

When we reached out to the authors for their materials, we learned that the second author had run this study by himself. After a bit of back and forth, he was able to send us the original Qualtrics file used to run the study. He also provided us with other information that we needed to run a replication. We are very appreciative of that.

A Hidden Confound

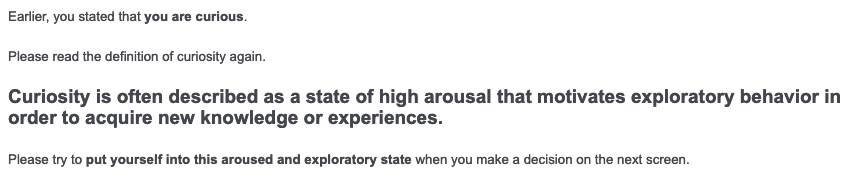

A possible explanation for the large effect emerged in the specifics of the Qualtrics file. It appeared that there might be a consequential confound. Our reading of the original article led us to the erroneous belief that the induction task and the gym-choice task were presented as separate, unrelated tasks within the survey [1]. In truth, not only were the tasks not presented as separate, but participants were also reminded of the previous emotion induction and specifically asked to make a decision consistent with it. In the Curiosity condition, this instruction read:

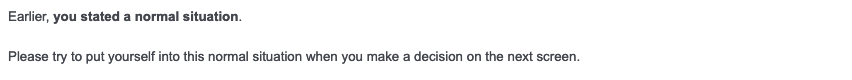

In the Control condition, it read:

So, relative to the Control condition, participants in the Curiosity condition were told to put themselves in a state of mind to “acquire new knowledge or experiences” and to express that state in the subsequent decision. That subsequent decision presented participants with a choice between a familiar gym and an "indulgent" gym that was unusual in a number of ways (e.g., it included a “negative-edge pool” and a “Scandinavian wood sauna”). We were concerned that the combination of instructions and stimuli could offer an alternative explanation: people in the curiosity condition weren’t choosing the indulgent gym because of the spillover effects from momentarily induced curiosity, but rather because they were told that they should make a choice consistent with wanting to try new experiences.

But of course, the only way to know whether the hidden confound was responsible for the published result was to test it. And so that’s what we did.

The Study

In this preregistered study (https://aspredicted.org/mz4h8.pdf), we used the same survey as in the original study, with two additional conditions that we added to identify the possible operation of an instructional confound [2]. You can access our Qualtrics survey here (.qsf), our materials here (.pdf), our data here (.csv), our codebook here (.xlsx), and our R code here (.R).

We randomly assigned 3,007 MTurkers to one of four conditions in a 2 (curiosity vs. control) x 2 (original instructions vs. confound-free instructions) between-subjects design [3]. Approximately half of the participants read instructions identical to the original, as described above. For the remaining participants we removed those instructions from the survey so as to eliminate the potential confound.
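The sample-size reasoning behind this design (see the footnote on attenuated interactions) can be illustrated with a rough normal-approximation sketch. This is not the calculation we preregistered; the effect size `d = 0.2`, the 80% power target, and the function names are all hypothetical choices made only for illustration. The point it shows is general: a 2 x 2 interaction contrast involves four cell means rather than two, so detecting an interaction of a given size takes about twice as many participants per cell as detecting a simple effect of that same size.

```python
from statistics import NormalDist


def n_per_cell(effect, contrast_cells, alpha=0.05, power=0.80, sd=1.0):
    """Approximate per-cell n for a two-sided z-test of a cell-mean contrast.

    effect: size of the contrast in raw units (a hypothetical input).
    contrast_cells: number of cell means entering the contrast with
        weight +1 or -1 (2 for a simple effect, 4 for a 2x2 interaction).
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    # Var(contrast) = contrast_cells * sd^2 / n; solve the power equation for n.
    return contrast_cells * (sd * (z_alpha + z_power) / effect) ** 2


def interaction_power(effect, n, alpha=0.05, sd=1.0):
    """Approximate power for a 2x2 interaction contrast with n per cell."""
    se = (4 * sd ** 2 / n) ** 0.5
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    # The tiny rejection probability in the opposite tail is ignored.
    return 1 - NormalDist().cdf(z_alpha - effect / se)


d = 0.2  # hypothetical standardized effect, NOT an estimate from either study
simple = n_per_cell(d, contrast_cells=2)
interaction = n_per_cell(d, contrast_cells=4)
print(f"per-cell n, simple effect: {simple:.0f}")
print(f"per-cell n, interaction:   {interaction:.0f}")
print(f"ratio: {interaction / simple:.1f}")
print(f"power with 750 per cell:   {interaction_power(d, 750):.2f}")
```

Because the interaction design also has four cells instead of two, the total sample grows by roughly a factor of four under these assumptions.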

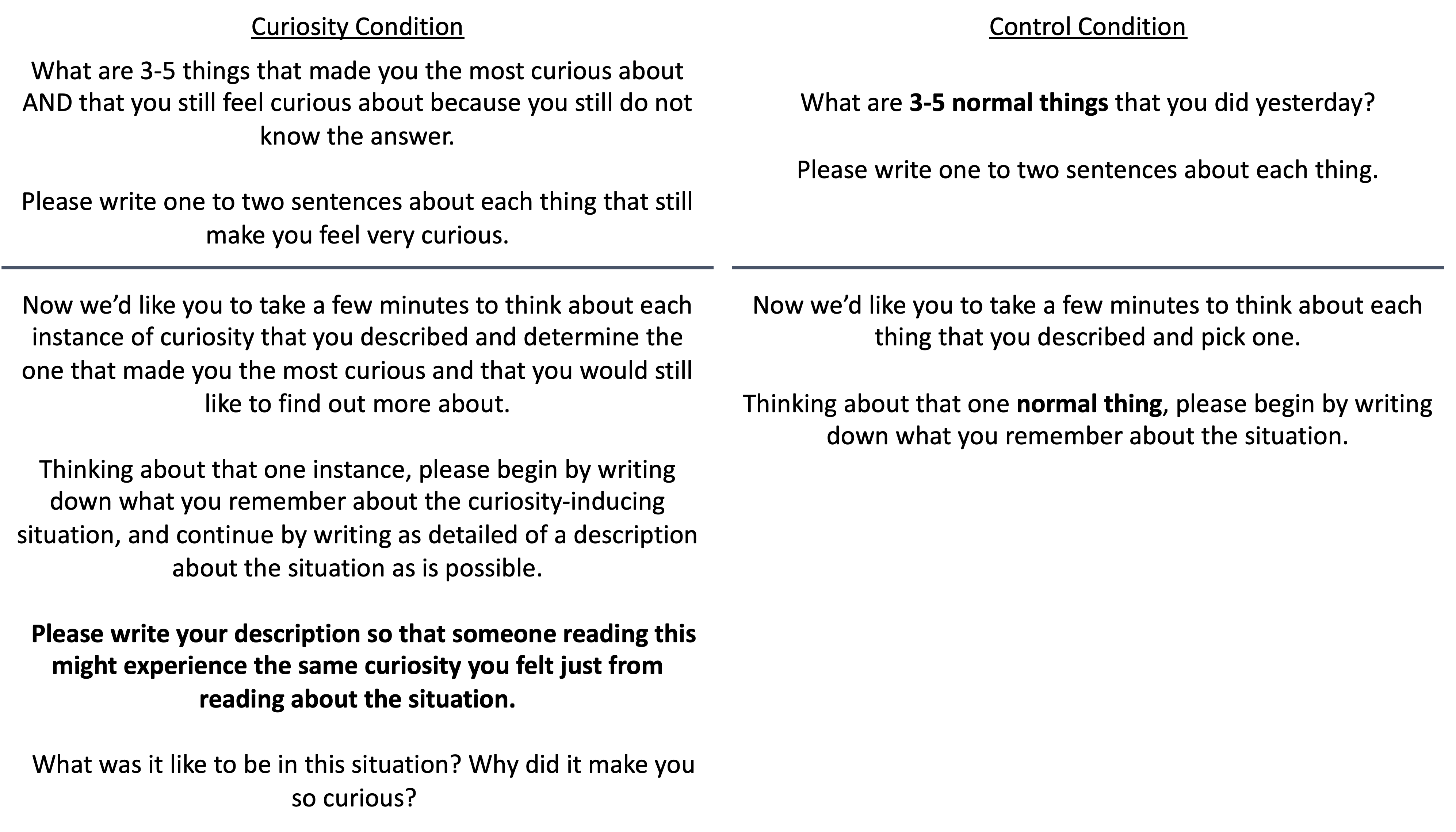

As in the original, our replication experiment began by telling participants that the study was about credit card rewards, but that they would first be asked to complete a writing task. This writing task served as the emotion induction. The task required participants to answer two questions, presented on two different screens. Here were the instructions:

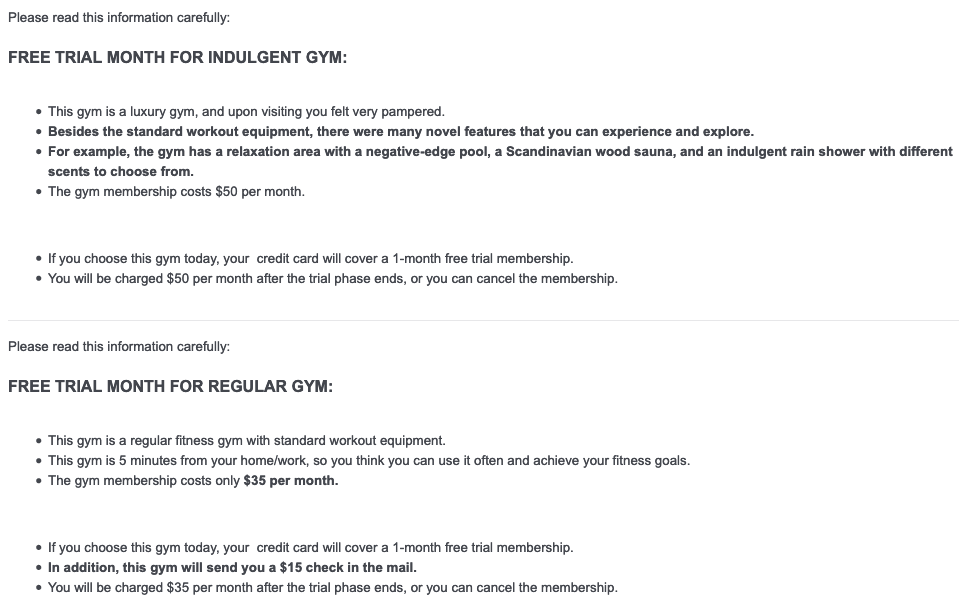

After that, participants completed the manipulation check. They then went on to imagine that their credit card was “offering you a gift card which is good for a 1-month membership at a gym in your area, valued at $50.” They were told that there were two gyms to choose from, one that is indulgent and one that is regular [4]:

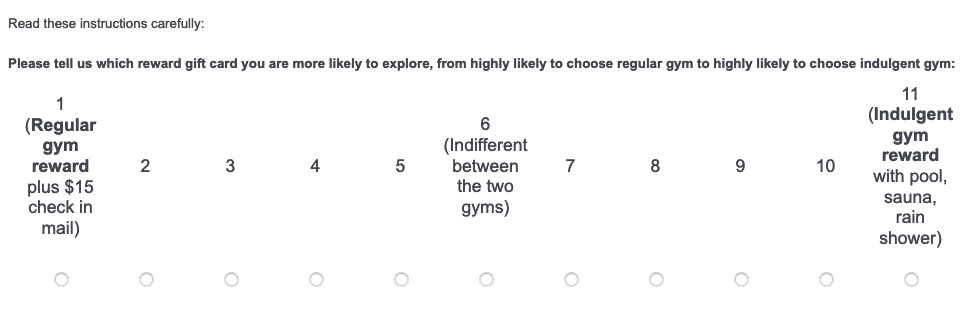

Then, in the “confounded instructions” condition, participants saw the instructions described above, which asked them to recall the writing task and, in the “curiosity” condition, to put themselves into an “exploratory state” when making their decision. In the “unconfounded instructions” condition, these instructions were replaced with, "Please make a decision on the next screen." Participants then responded to the key dependent variable, the gym choice:

Results

First, we wanted to test whether the curiosity manipulation was successful. It was. Participants reported greater curiosity in the Curiosity condition (M = 3.67, SD = 0.79) than in the Control condition (M = 3.18, SD = 0.82), F(1, 3004) = 281.42, p < 10^-16. There was no Instruction condition main effect (p = .834) or Curiosity x Instruction interaction (p = .721).
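For readers who want to see how a curiosity index of this kind is built, here is a minimal sketch of the scoring described in the footnotes: three 5-point items (curious, bored, eager), with the "bored" item reverse-coded before averaging. The function name and the assumption that responses are coded 1 through 5 are ours for illustration; the posted R code is the authoritative version.

```python
def curiosity_index(curious, bored, eager, scale_max=5):
    """Average three manipulation-check items after reverse-coding 'bored'.

    Assumes each item is coded 1..scale_max (an assumption about the coding,
    not something stated in the post).
    """
    for value in (curious, bored, eager):
        if not 1 <= value <= scale_max:
            raise ValueError(f"response {value} is outside 1..{scale_max}")
    # Reverse-code so that higher always means more curious: 1 <-> 5, 2 <-> 4.
    bored_reversed = (scale_max + 1) - bored
    return (curious + bored_reversed + eager) / 3


# Hypothetical respondent: fairly curious, not very bored, moderately eager.
print(curiosity_index(curious=4, bored=2, eager=3))
```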

Recall that in the original study, which contained the confounded instructions, participants in the Curiosity condition were more likely to choose the more unusual, indulgent gym. We replicated that result, but only when we administered the confounded instructions [5]:

It seems that curiosity does not tempt indulgence, at least not in a way that is detectable with 750 per cell. Rather, the authors’ original result was best explained by a confound. Furthermore, it was a confound that could only be identified in the original materials, and was not observable to reviewers and readers who were merely reading the final manuscript [6].


Conclusion

Many contemporary methods courses teach something about open science and pre-registration, but all methods courses have always highlighted the lurking dangers of confounds. For that reason, their identification largely dominates comments in the review process and conversations in journal clubs. But those dialogues are only possible when the confounds are taken out of hiding and shared with all audiences. Ideally, every article will contain enough detail to differentiate confounded and unconfounded designs, but even when one does not, the posting of original materials (and data) will necessarily help. We hope that all journals, including the Journal of Consumer Research, will default to requiring authors to share their original materials. In the meantime, we recommend that reviewers ask authors to do so.

Author feedback

When we reached out to the authors for comments on the post, they responded as follows:

"We would like to thank you for your interest in our work and for examining it further.

We had made the requested materials and instructions available to you, based on which you conducted a replication. As summarized in your blog post, you found significant replication using our original design which featured an emotional-state reminder. Using your proposed method and design which excluded an emotional state reminder, such replication did not materialize. We find your results intriguing and hope to use these insights to inform future theorizing and empirical probes of curiosity specifically and emotion induction generally. Many thanks again for your interest in our work."

Footnotes

1. The authors wrote, “the state of unsatisfied curiosity translates to a desire to pursue unrelated rewards,” emphasis added.

2. Except for our instruction manipulation, this study differed from the original in two ways. First, the full battery of manipulation check items used by the original authors was purchased from https://www.mindgarden.com/ and cost $2.50 per participant. We did not have the funds to use that same set of items, so we devised our own battery of manipulation check items. Specifically, we asked participants to indicate, on 5-point scales, “How curious/bored/eager do you feel right now?” We reverse-coded the “bored” item and averaged them together to create an index of curiosity. These items were similar to the items the authors used to assess curiosity. Second, to make the survey a manageable length and cost (we paid $1 per participant, whereas they paid $1.50), we excluded the original authors’ measurement of “desire for rewards.” This measure came after all of the measures of interest here, and so excluding it had no bearing on the results.

3. The original study had a sample size of 197, or ~99 per cell. In this replication, we were investigating an attenuated interaction hypothesis, which virtually always necessitates getting a very large sample (see Colada [17]). To make sure we were sufficiently powered, we decided to recruit 3,000 MTurkers, or 750 per cell.

4. As in the original study, these descriptions were presented in a random order.

5. Specifically, there were significant main effects of Curiosity condition, F(1, 3003) = 18.53, p < 10^-4, and Instruction condition, F(1, 3003) = 12.55, p = .0004, that were qualified by a significant Curiosity x Instruction interaction, F(1, 3003) = 10.88, p = .001.

6. There is one other detail of importance to report here: There seemed to be differential attrition, such that people assigned to the curiosity condition were more likely to drop out of the study than were participants assigned to the control condition. As a result, we wound up with more participants in the Control condition (n = 1,681) than in the Curiosity condition (n = 1,327). There is no way to know how much this affected the results, if at all. We also do not know whether this was a problem in the original study. All we know is that, because of this, random assignment was compromised to some extent.
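The imbalance described in the last footnote can be checked against chance with a simple goodness-of-fit sketch. It assumes the survey randomizer assigned the Curiosity and Control conditions with equal probability, which the post does not state explicitly, and it uses the cell counts exactly as reported above. The function name is hypothetical. Note what the test can and cannot say: it only asks whether a split this uneven is plausible under 50/50 assignment, not whether attrition changed the results.

```python
import math


def equal_split_chi_square(n_a, n_b):
    """Chi-square goodness-of-fit test (1 df) against an assumed 50/50 split."""
    expected = (n_a + n_b) / 2
    chi2 = ((n_a - expected) ** 2 + (n_b - expected) ** 2) / expected
    # With 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Counts as reported in the footnote: Control n = 1,681, Curiosity n = 1,327.
chi2, p_value = equal_split_chi_square(1681, 1327)
print(f"chi-square(1) = {chi2:.2f}, p = {p_value:.2g}")
```

A very small p-value here would be consistent with the differential dropout described in the footnote, though it cannot by itself identify why participants left.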