In the last class of the semester I hold a “town-hall” meeting: an open discussion about how to improve the course (content, delivery, grading, etc.). I follow up with a required online poll to “vote” on proposed changes [1].


Grading in my class is old-school: two tests, each worth 40%, and homework worth 20% (graded mostly on a 1/0 completion scale). The downside of this model is that those who do poorly early on get demotivated. Also, a bit of bad luck on a test hurts a lot. During the latest town-hall, the idea of having multiple quizzes and dropping the worst was popular. One problem with this model is that students can blow off a quiz entirely. After the town-hall I thought about why students loved the drop-1 idea and whether I could capture the same psychological benefit with a smaller pedagogical loss.

I came up with TWARKing: assigning test weights after results are known [2]. With TWARKing, instead of each test counting 40% for every student, whichever test an individual student did better on gets more weight. If Julie does better on Test 1 than on Test 2, then Julie’s Test 1 gets 45% and her Test 2 gets 35%; Jason did better on Test 2, so Jason’s Test 2 gets 45% [3]. Dropping a quiz becomes a special case of TWARKing: the worst gets 0% weight.
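To make the weighting rule concrete, here is a minimal Python sketch. The 45/35 test weights and the 20% homework weight come from the description above; the function names, the use of integer percentage weights, and the homework score of 100 are my own illustrative assumptions.

```python
def equal_weight_grade(test1, test2, homework, test_pct=40, hw_pct=20):
    """Old-school grade: both tests get the same weight (in percent)."""
    return (test_pct * (test1 + test2) + hw_pct * homework) / 100


def twark_grade(test1, test2, homework, hi_pct=45, lo_pct=35, hw_pct=20):
    """TWARKed grade: each student's better test gets the larger weight."""
    best, worst = max(test1, test2), min(test1, test2)
    return (hi_pct * best + lo_pct * worst + hw_pct * homework) / 100


# Hypothetical student with a 90 and a 70 on the tests, 100 on homework.
print(equal_weight_grade(90, 70, 100))  # 84.0
print(twark_grade(90, 70, 100))         # 85.0

# Dropping the worst test as a special case: the lower score gets 0% weight
# (assuming the better test then carries the full 80% test share).
print(twark_grade(90, 70, 100, hi_pct=80, lo_pct=0))  # 92.0
```

Which test is "Test 1" does not matter to the function: only the higher and lower scores do, which is exactly what makes the weights student-specific.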

It polls well

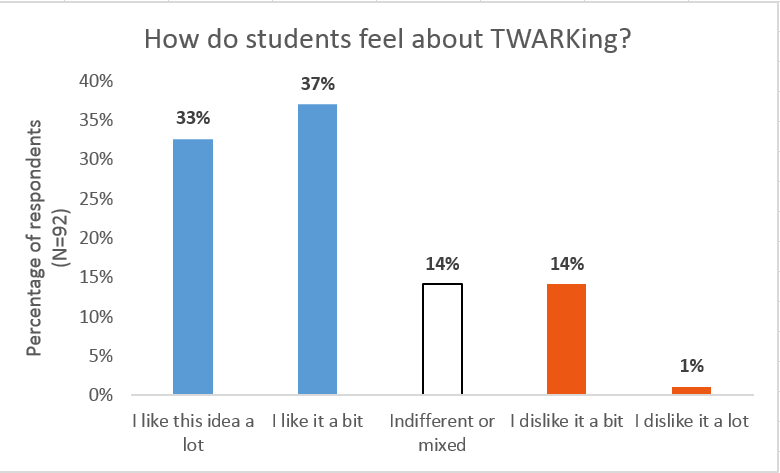

I expected TWARKing to do well in the online poll but was worried students would fall prey to competition-neglect, so I wrote a long question stacking the deck against TWARKing:

70% of students were in favor, only 15% against (N=92; only 3 students did not complete the poll).

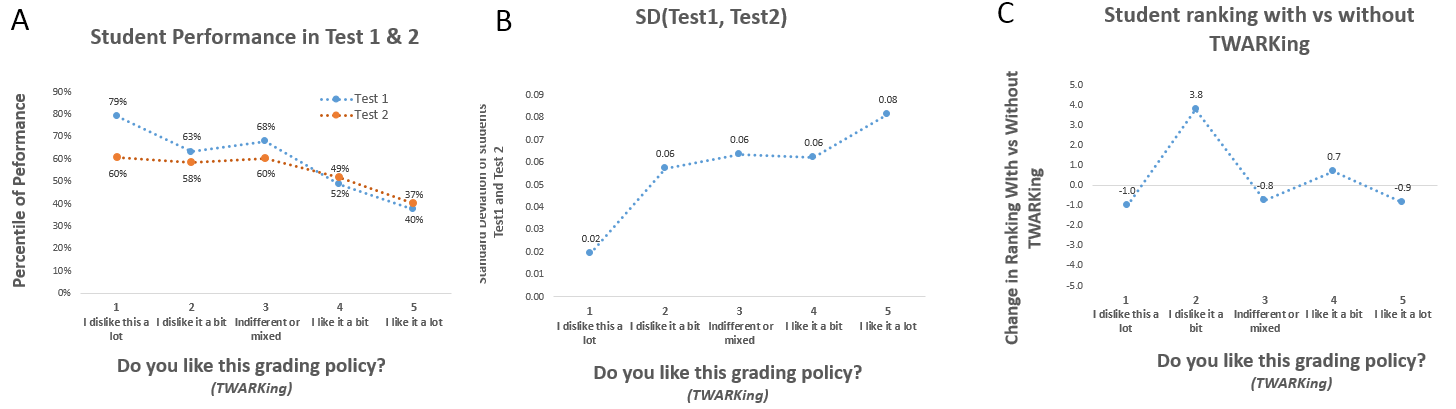

The poll is not anonymous, so I looked at how TWARKing attitudes are correlated with actual performance.

Panel A shows that students doing better like TWARKing less, but the effect is not as strong as I would have expected. Students liking it 5/5 perform in the bottom 40%; those liking it 2/5 are in the top 40%.

Panel B shows that students with more uneven performance do like TWARKing more, but the effect is small and unimpressive (Spearman's r=.21, p=.044).

For Panel C I recomputed the final grades as if TWARKing had been implemented this semester and checked whether the change in ranking correlated with support for TWARKing. It did not. Maybe it was asking too much for this to work, as students did not yet know their Test 2 scores.
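The Panel C recomputation can be sketched roughly as follows. This is not the code behind the figure: the three students, the helper names, and the tie-handling rule (tied students share the average rank) are all hypothetical. Grades are kept as integer "percent times points" so that ties are detected exactly.

```python
def ranks(scores):
    """Rank 1 = highest score; tied scores share the average of their ranks."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    result = [0.0] * len(scores)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the block while the next student has the same score.
        while end + 1 < len(order) and scores[order[end + 1]] == scores[order[pos]]:
            end += 1
        avg_rank = (pos + end) / 2 + 1
        for k in range(pos, end + 1):
            result[order[k]] = avg_rank
        pos = end + 1
    return result


def rank_change(students, hi_pct=45, lo_pct=35, test_pct=40, hw_pct=20):
    """Positive value = the student moves up the class ranking under TWARKing."""
    equal = [test_pct * (t1 + t2) + hw_pct * hw for t1, t2, hw in students]
    twark = [hi_pct * max(t1, t2) + lo_pct * min(t1, t2) + hw_pct * hw
             for t1, t2, hw in students]
    return [e - t for e, t in zip(ranks(equal), ranks(twark))]


# Hypothetical (test1, test2, homework) scores for three students.
students = [(90, 70, 100), (81, 80, 100), (60, 60, 100)]
print(rank_change(students))  # [1.0, -1.0, 0.0]
```

In this toy class the uneven student (90/70) overtakes the even one (81/80), even though both see their own average go up. The rank changes could then be correlated with each student's poll rating, for instance with `scipy.stats.spearmanr`.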

My read is that students cannot anticipate whether it will help or hurt them, and they generally like it all the same.

TWARKing could be pedagogically superior.

Tests serve two main roles: motivating students and measuring performance. I think TWARKing could be better on both fronts.

Better measurement. My tests tend to include insight-type questions: students either nail them or fail them. It is hard, I think, to get lucky in my tests: hard to get a high score despite not knowing the material. But it is, unfortunately, easy to get unlucky: to get no points on a topic you had a decent understanding of [4]. Giving more weight to the higher-scoring test is hence giving more weight to the more accurate of the two tests. So it could improve the overall validity of the grade. A student who gets a 90 and a 70 is, I presume, better than one getting 80 in both tests.

This reminded me of what Shugan & Mitra (2009) label the “Anna Karenina effect” in their under-appreciated paper (11 Google cites). Their Anna Karenina effect (there are a few; each different from the other) occurs when less favorable outcomes carry less information than more favorable ones; in those situations, measures other than the average, e.g., the max, perform better for out-of-sample prediction. [5]

To get an intuition for this Anna Karenina effect, think about what contains more information: a marathon runner’s best or worst running time? A researcher’s most or least cited paper?

Note that one can TWARK within a test, weighting each student's highest-scoring answers more. I will.

Motivation. After doing very poorly in a test it must be very motivating to feel that if you study hard you can make this bad performance count less. I speculate that, with TWARKing, students who underperform in Test 1 are less likely to be demotivated for Test 2 (I will test this next semester, but without random assignment…). TWARKing has the magical psychological property that the gains are very concrete: every student whose two test scores differ gets a higher average with TWARKing than without (and nobody gets a lower one), and they see that. The losses, in contrast, are abstract and unverifiable (you don’t see the students who benefited more than you did, leading to a net loss in ranking).
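The claim that nobody's own average goes down follows directly from the 45%/35% versus 40%/40% weights. A short derivation, writing $T_{\max}$ and $T_{\min}$ for a student's higher and lower test score (my notation; the homework term is identical under both schemes and cancels):

$$
G_{\text{TWARK}} - G_{\text{equal}} = \left(0.45\,T_{\max} + 0.35\,T_{\min}\right) - 0.40\left(T_{\max} + T_{\min}\right) = 0.05\left(T_{\max} - T_{\min}\right) \ge 0
$$

So each student gains 0.05 grade points per point of gap between their two tests, and gains nothing only when the two scores are equal. Because the gain grows with the gap, students with uneven scores can still overtake students with even ones in the ranking.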

Bottom line

Students seem to really like TWARKing.

It may make things better for measurement.

It may improve motivation.

A free happiness boost.


Footnotes.

- [1] Like Brexit, the poll in OID290 is not binding.

- [2] Obviously the name is inspired by ‘HARKing’: hypothesizing after results are known. The similarity to Twerking, in contrast, is unintentional, and, given the sincerity of the topic, probably unfortunate.

- [3] I presume someone already does this; I am not claiming novelty.

- [4] Students can still get lucky if I happen to ask about a topic they prepared better for.

- [5] They provide calibrations with real data in sports, academia and movie ratings. Check the paper out.