Metacritic.com scores and aggregates critics’ reviews of movies, music, and video games. The website provides a summary assessment of the critics’ evaluations, using a scale ranging from 0 to 100. Higher numbers mean that critics were more favorable.

In theory, this website is pretty awesome, seemingly leveraging the wisdom-of-crowds to give consumers the most reliable recommendations. After all, it’s surely better to know what a horde of reviewers thinks than to know what a single reviewer thinks.

But at least when it comes to music reviews, Metacritic is broken. I’ll explain how and why it is broken, I’ll propose a way to fix it, and I’ll show that the fix works.

Metacritic Is Broken

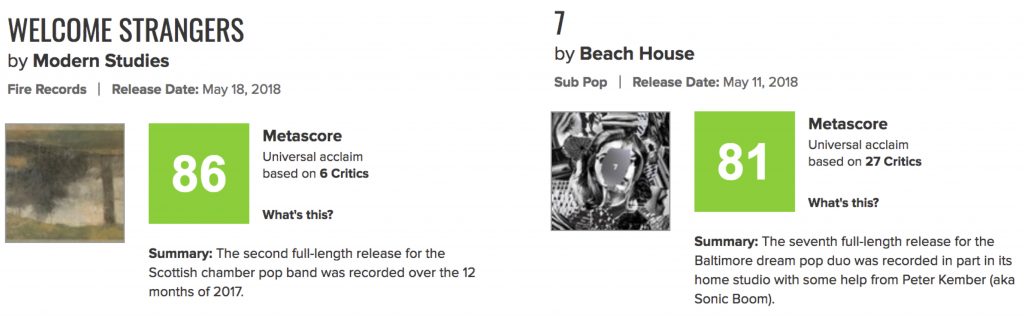

A few weeks ago, a fairly unknown “Scottish chamber pop band” named Modern Studies released an album that is not very good (Spotify .html). At about the same time, the nearly perfect band Beach House released a nearly perfect album (Spotify .html).

You might think these things are subjective, but in many cases they are really not. The Great Gatsby is objectively better than this blog post, and Beach House’s album is objectively better than Modern Studies’s album.

But what does Metacritic say?

So, yeah, metacritic is broken.

If this were a one-off example, I wouldn’t be writing this post. It is not a one-off example. For example, Metacritic would lead you to believe that Fever Ray's 2017 release (Metascore of 87) is almost as good as St. Vincent's 2017 release (Metascore of 88), but St. Vincent’s album is, I don’t know, a trillion times better [1]. More recently, Metacritic rated an unspeakably bad album by Goat Girl as something that is worth your time (Metascore of 80). It is not worth your time.

So what’s going on?

What’s going on is publication bias. Music reviewers don’t publish a lot of negative reviews, especially of artists that are unknown. As evidence of this, consider that although Metascores theoretically range from 0-100, in practice only 16% of albums released in 2018 have received a Metascore below 70 [2]. This might be because reviewers don’t want to be mean to struggling artists. Or it might be because reviewers don’t like to spend their time reviewing bad albums. Or it might be for some other reason.

But whatever the reason, you have to correct for the fact that an album that gets just a few reviews is probably not a very good album.

How can we fix it?

What I’m going to propose is kind of stupid. I didn’t put in the effort to try to figure out the optimal way to correct for this kind of publication bias. Honestly, I don’t know that I could figure that out. So instead, I thought about it for about 19 seconds, and I came to the following three conclusions:

(1) We can approximate the number of missing reviews by subtracting the number of observed reviews from the maximum number of reviews another album received in the same year [3].

(2) We can assume that the missing reviewers would’ve given fairly poor reviews. Since it’s a nice round number, let’s say those missing reviews would average out to 70.

(3) Albums with Metascores below 70 probably don’t need to be corrected at all, since reviewers already felt licensed to write negative reviews in these cases.

For 2018, the most reviews I observed for an album is 30. As you can see in the above figures, Beach House’s album received 27 reviews. Thus, my simple correction adds three reviews of 70, adjusting it (slightly) down from 81 to (27 × 81 + 3 × 70) / 30 = 79.9. Meanwhile, Modern Studies’s album received only 6 reviews, and so we would add 24 reviews of 70, resulting in a much bigger adjustment, from 86 to (6 × 86 + 24 × 70) / 30 = 73.2.

So now we have Beach House at 79.9 and Modern Studies at 73.2. That’s much better.
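For readers who want to try the correction themselves, here is a minimal Python sketch of the three rules above. It is not the R code linked later in this post; the function name `adjusted_metascore` and its parameters are made up for illustration, and it treats a Metascore as if it were a simple average of the observed reviews, which is the same simplification the arithmetic above makes.

```python
def adjusted_metascore(metascore, n_reviews, max_reviews, fill_score=70.0):
    """Pad an album's reviews up to max_reviews with reviews of fill_score.

    Hypothetical helper: assumes the Metascore behaves like a plain
    average of n_reviews individual reviews.
    """
    if n_reviews <= 0 or n_reviews > max_reviews:
        raise ValueError("n_reviews must be between 1 and max_reviews")
    # Rule (3): albums already below the fill score are left alone.
    if metascore < fill_score:
        return float(metascore)
    # Rule (1): missing reviews = most reviews seen that year - observed reviews.
    missing = max_reviews - n_reviews
    # Rule (2): every missing review is assumed to score fill_score.
    total = metascore * n_reviews + fill_score * missing
    return total / max_reviews


# The two albums from this post, with 30 as the 2018 maximum.
print(round(adjusted_metascore(81, 27, 30), 1))  # Beach House
print(round(adjusted_metascore(86, 6, 30), 1))   # Modern Studies
```

The two calls use the review counts and Metascores quoted above, so they should reproduce the adjusted scores in the text. Changing `fill_score` is an easy way to see how much the result depends on the "nice round number" of 70.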

The true test of whether this algorithm works is whether Metascores become more predictive of consumers’ music evaluations after applying the correction than before. To run that test, you’d need to have consumers evaluate a bunch of different albums, while ensuring that there is no selection bias in their ratings. How in the world do you do that?

Is it fixed?

Well, it just so happens that for the past 5.5 years, Leif Nelson, Yoel Inbar, and I have been systematically evaluating newly released albums. We call it Album Club, and it works like this. Almost every week, one of us assigns an album for the three of us to listen to. After we have given it enough listens, we email each other with a short review. In each review we have to (1) rate the album on a scale ranging from 0-10, and (2) identify our favorite song on the record [4].

The albums that we assign are pretty diverse. For example, we’ve listened to pop stars like Taylor Swift, popular bands like Radiohead and Vampire Weekend, underrated singer/songwriters like Eleanor Friedberger, a 21-year-old country singer who sounds like a 65-year-old country singer, a (very good) “experimental rap trio”, and even a (deservedly) unpopular “improvisational psych trio from Brooklyn” (Spotify) [5]. Moreover, at least one of us seems not to try to choose albums that we are likely to enjoy. So, for our purposes, this is a pretty great dataset (data .xlsx; code .R).

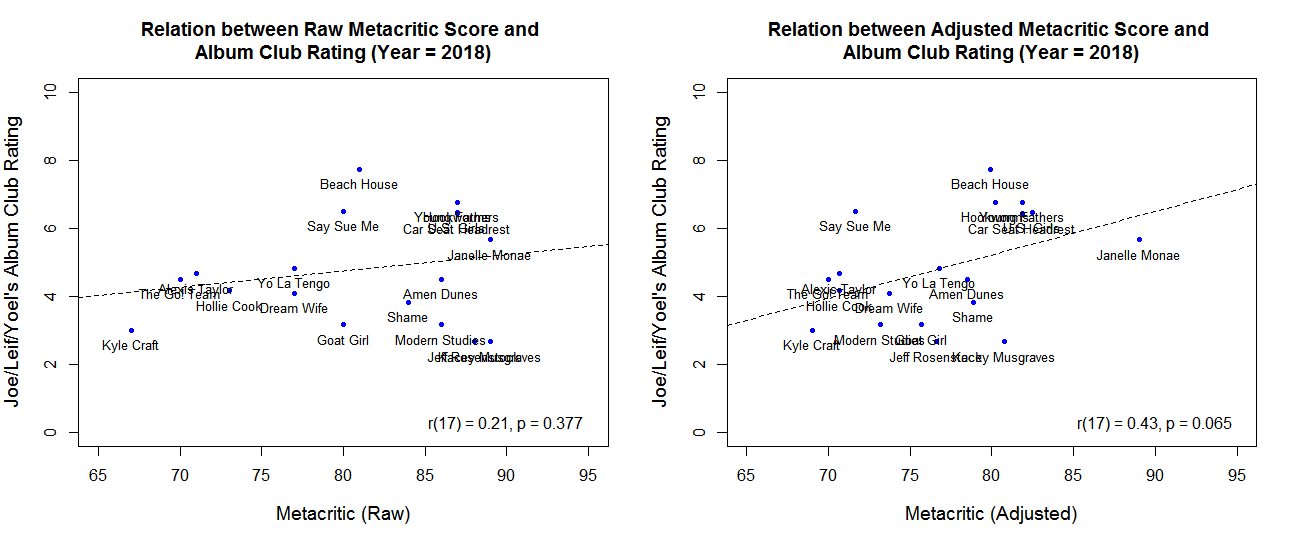

So let’s start by taking a look at 2018. So far this year, we have rated 22 albums, and 19 of those have received Metascores [6]. In the graphs below, I am showing the relationship between our average rating of each album and (1) actual Metascores (left panel) and (2) adjusted Metascores (right panel).

The first thing to notice is that, unlike Metacritic, Leif, Yoel, and I tend to use the whole freaking scale. The second thing to notice is the point of this post: Metascores were more predictive of our evaluations when we adjusted them for publication bias (right panel) than when we did not (left panel).
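To make "more predictive" concrete, one option is to compare how strongly each version of the Metascore correlates with our ratings. The sketch below assumes a Pearson correlation, which may or may not match the linked R code. The helper names (`pearson_r`, `pad_to_max`) are hypothetical, and the five rows are invented toy numbers, not the Album Club data.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(ss_x * ss_y)


def pad_to_max(score, n_reviews, max_reviews=30, fill=70):
    """Compact restatement of the correction: pad with reviews of `fill`."""
    if score < fill:
        return float(score)
    return (score * n_reviews + fill * (max_reviews - n_reviews)) / max_reviews


# Invented toy rows: (raw Metascore, number of reviews, club rating 0-10).
toy_albums = [
    (85, 5, 4.0),
    (82, 28, 8.5),
    (78, 12, 5.0),
    (88, 30, 9.0),
    (65, 10, 3.0),
]

raw = [score for score, _, _ in toy_albums]
adjusted = [pad_to_max(score, n) for score, n, _ in toy_albums]
ratings = [rating for _, _, rating in toy_albums]

r_raw = pearson_r(raw, ratings)
r_adjusted = pearson_r(adjusted, ratings)
print(f"raw r = {r_raw:.2f}, adjusted r = {r_adjusted:.2f}")
```

With the real data you would swap `toy_albums` for one row per album in a given year and compare the two correlations, which is what the left and right panels do visually.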

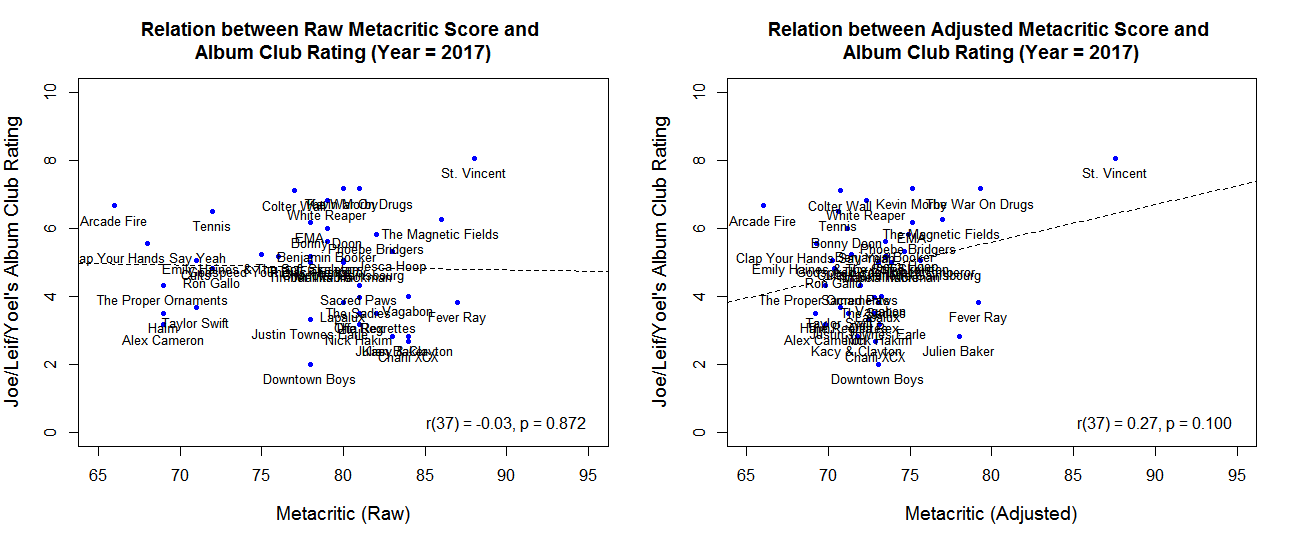

Now, I got the idea for this post because of what I noticed about a few albums in 2018. Thus, the analyses of the 2018 data must be considered a purely exploratory, potentially p-hacked endeavor. It is important to do confirmatory tests using the other years in my dataset (2013-2017). So that’s what I did. First, let’s look at a picture of 2017:

That looks like a successful replication. Though Metacritic did correctly identify the excellence of the St. Vincent album, it was otherwise kind of a disaster in 2017. But once you correct for publication bias, it does a lot better.

You can see in these plots that the corrected Metacritic scores are closer together on the “Adjusted” chart, indicating that the technique I am employing to correct for publication bias reduces the variance in Metascores. Reducing variance usually lowers correlations. But in this case, it increased the correlation. I take that as additional evidence that publication bias is indeed a big problem for Metacritic.
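A small sketch can help separate the two things going on here. If every album were padded with the same number of 70s, the scores would be squeezed together by a straight-line rescaling, and a Pearson correlation would not move at all. Any change in the correlation therefore has to come from the fact that albums with fewer reviews get pulled down more. The code below illustrates this with invented toy numbers (not the Album Club data) and hypothetical helper names. All toy scores are at least 70, so the below-70 exemption does not come into play.

```python
from math import sqrt
from statistics import mean, pvariance

MAX_REVIEWS = 30  # most reviews for any album in the year (30 for 2018)
FILL = 70         # assumed score of each missing review


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    ss_x = sum((x - mx) ** 2 for x in xs)
    ss_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(ss_x * ss_y)


def pad(score, n_reviews):
    """Pad up to MAX_REVIEWS with reviews of FILL (scores here are all >= FILL)."""
    return (score * n_reviews + FILL * (MAX_REVIEWS - n_reviews)) / MAX_REVIEWS


# Invented toy values.
raw = [85, 82, 78, 88, 74]
counts = [5, 28, 12, 30, 20]
ratings = [4.0, 8.5, 5.0, 9.0, 6.0]

# Pretend every album had 15 reviews: a pure linear squeeze toward 70.
uniform = [pad(score, 15) for score in raw]
# The actual rule: albums with fewer reviews are pulled down more.
by_count = [pad(score, n) for score, n in zip(raw, counts)]

r_raw = pearson_r(raw, ratings)
r_uniform = pearson_r(uniform, ratings)
r_by_count = pearson_r(by_count, ratings)

print(f"variance: raw = {pvariance(raw):.1f}, uniform = {pvariance(uniform):.1f}")
print(f"r: raw = {r_raw:.3f}, uniform = {r_uniform:.3f}, by count = {r_by_count:.3f}")
```

In this toy setup the uniform squeeze cuts the variance but leaves the correlation where it was, while padding by review count changes it. That is consistent with the idea that the number of reviews itself carries information about album quality.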

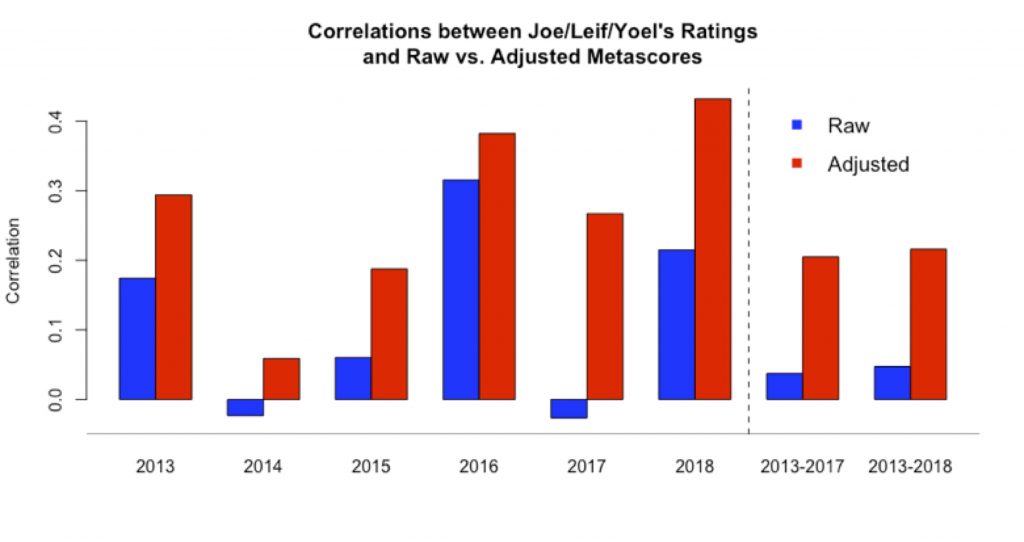

So what about the other years? Let’s look at a bar chart this time:

The effect is not always big, but it is always in the right direction. Metascores are more predictive when you use my dumb, blunt method of correcting for publication bias in music reviews than when you don’t.

In sum, you should listen to the new Beach House album. But not to the new Modern Studies album.

Footnotes.

1. I am convinced that Fever Ray’s song “IDK About You” was the worst song released in 2017. I tried to listen to it again after typing that sentence, but was unable to. Still, Fever Ray is definitely not all bad. Their best song “If I Had A Heart” (released in 2009) soundtracks a dark scene in Season 4 of “Breaking Bad”.

2. 59 out of 307

3. The same year is important, because the number of reviews has declined over time. I don’t know why.

4. In case you are interested, I’ve made a playlist of 25 of my favorite songs that I discovered because of Album Club: Spotify

5. Not to oversell the amount of diversity: it is understood that assigning a death metal album will get you kicked out of the Club. You can, however, assign songs that are *about* death metal: Spotify

6. Some albums that we assign are not reviewed by enough critics to qualify for a Metascore. This is true of 59 of the 261 albums in our dataset. This is primarily because Leif likes to assign albums by obscure high school bands from suburban Wisconsin, some of which are surprisingly good: Spotify