A new paper finds that people will donate more money to help 20 people if you first ask them how much they would donate to help 1 person.

This Unit Asking Effect (Hsee, Zhang, Lu, & Xu, 2013, Psychological Science) emerges because donors are naturally insensitive to the number of individuals needing help. For example, Hsee et al. observed that if you ask different people how much they’d donate to help either 1 needy child or 20 needy children, you get virtually the same answer. But if you ask the same people to indicate how much they’d donate to 1 child and then to 20 children, they realize that they should donate more to help 20 than to help 1, and so they increase their donations.

If true, then this is a great example of how one can use psychology to design effective interventions.

The paper reports two field experiments and a study that solicited hypothetical donations (Study 1). Because it was easy, I attempted to replicate the latter. (Here at Data Colada, we report all of our replication attempts, no matter the outcome.)

I ran two replications: a “near replication” using materials that I developed based on the authors’ description of their methods (minus a picture of a needy schoolchild), and then an “exact replication” using the authors’ exact materials. (Thanks to Chris Hsee and Jiao Zhang for providing those.)

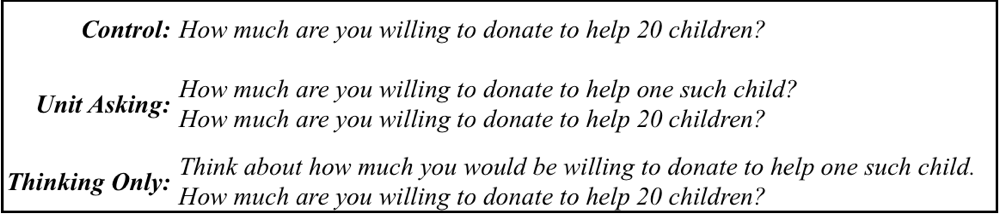

In the original study, people were asked how much they’d donate to help a kindergarten principal buy Christmas gifts for her 20 low-income pupils. There were four conditions, but I only ran the three most interesting conditions:

The original study had ~45 participants per cell. To be properly powered, replications should have ~2.5 times the original sample size. I (foolishly) collected only ~100 per cell in my near replication, but corrected my mistake in the exact replication (~150 per cell). Following Hsee et al., I dropped responses more than 3 SD from the mean, though there was a complication in the exact replication that required a judgment call. My studies used MTurk participants; theirs used participants from “a nationwide online survey service.”
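To make the two rules in the paragraph above concrete, here is a minimal Python sketch of the 2.5x sample-size rule of thumb and the 3 SD exclusion. The function names are mine, not the authors', and I am assuming the cutoff uses the sample standard deviation of whatever list of responses you pass in; whether the exclusion should be applied within each condition or across the pooled sample is a choice the sketch leaves to the caller.

```python
import math
import statistics


def replication_n_per_cell(original_n, multiplier=2.5):
    """Per-cell sample size under the ~2.5x rule of thumb, rounded up."""
    return math.ceil(original_n * multiplier)


def drop_outliers(values, k=3.0):
    """Keep responses within k sample SDs of the mean (assumed exclusion rule)."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD (n - 1 denominator)
    return [v for v in values if abs(v - mean) <= k * sd]
```

With ~45 per cell in the original, `replication_n_per_cell(45)` comes out above the ~100 per cell I collected in the near replication, which is why I call that sample too small.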

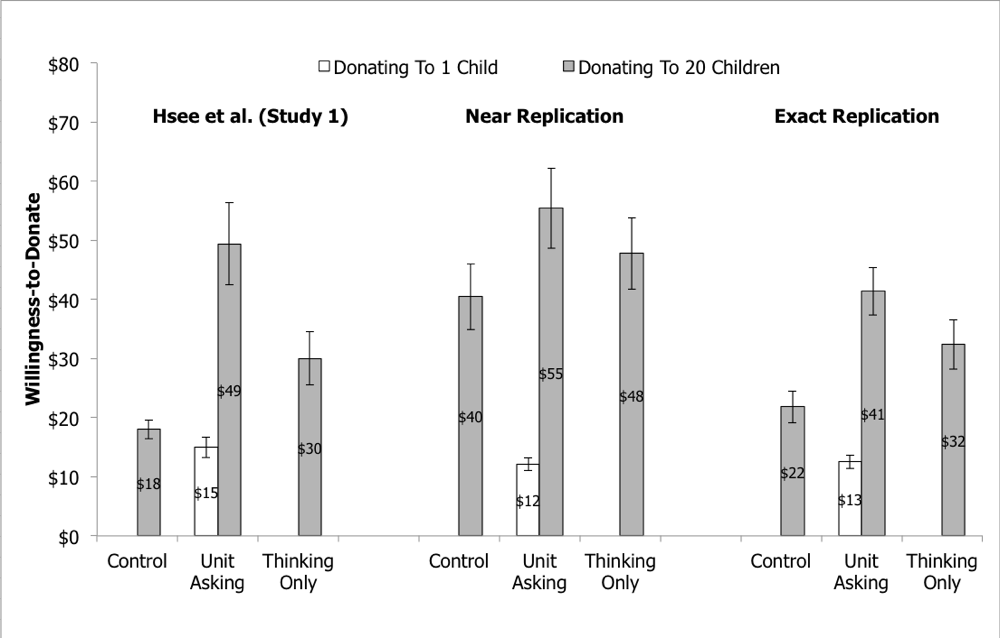

Here are the results of the original (some means and SEs are guesses) and my replications (full data).

I successfully replicated the Unit Asking Effect, as defined by Unit Asking vs. Control; it was marginal (p = .089) in the smaller-sampled near replication and highly significant (p < .001) in the exact replication.

There were some differences. First, my effect sizes (d=.24 and d=.48) were smaller than theirs (d=.88). Second, whereas they found that, across conditions, people were insensitive to whether they were asked to donate to 1 child or 20 children (the white $15 bar vs. the gray $18 bar), I found a large difference in my near replication and a smaller but significant difference in the exact replication. This sensitivity is important, because if people do give lower donations for 1 child than for 20, then they might anchor on those lower amounts, which could diminish the Unit Asking Effect.
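For readers who want to compute effect sizes like these from the posted data, here is a minimal sketch of Cohen's d for two independent groups. It assumes the common pooled-standard-deviation version of d; the post does not say which variant produced the numbers above, so treat this as one reasonable reading rather than the exact calculation. The function name is my own.

```python
import math
import statistics


def cohens_d(group_a, group_b):
    """Cohen's d for two independent groups, using the pooled sample SD."""
    n_a, n_b = len(group_a), len(group_b)
    var_a = statistics.variance(group_a)  # sample variance (n - 1 denominator)
    var_b = statistics.variance(group_b)
    pooled_sd = math.sqrt(
        ((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2)
    )
    return (statistics.mean(group_a) - statistics.mean(group_b)) / pooled_sd
```

Passing the Unit Asking donations as `group_a` and the Control donations as `group_b` gives a positive d when Unit Asking raises donations.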