This post is the second in a series (.htm) in which we argue that meta-analytic means are often meaningless, because these averages (1) include invalid tests of the meta-analytic research question, and (2) aggregate incommensurable results. In each post we showcase examples of (1) and (2) in a different published meta-analysis. We seek out meta-analyses that we believe to be typical, rather than unusual, in how problematic they are.

As in our first post, here we consider a meta-analysis of nudging, this one performed by economists, across two published papers. The first, published in 2019 in the Journal of Behavioral and Experimental Economics ("JBEE", .htm), explored effect-size heterogeneity across nudge types. The second, published in 2022 in Econometrica (.htm), re-used a subset of those studies plus studies from other sources, to compare 'the effect' of nudging in academic publications (8.7%) vs. government-sponsored trials (1.4%).

Studies discussed below appeared in the academic publications and were included in both papers.

1) Invalid tests of the meta-analytic question

The authors of the Econometrica article posted a spreadsheet (.xlsx) containing all effect size estimates from the academic studies that they aggregated to produce their average effect of 8.7%. We sorted that spreadsheet by effect size and read the original articles, starting with the one with the biggest effect size. After five papers, we had three examples of invalid results and stopped looking for more.

(The JBEE dataset is not posted, but we obtained it from the authors (.xlsx)).

Invalid Study #1. The three largest effects are (about) bananas.

The three largest effects in the meta-analysis all come from Study 1 of a 2017 Journal of Business Research paper (.htm), which is described as taking place in a Swedish supermarket. The study explored interventions aimed at getting customers to buy eco/organic bananas rather than regular bananas.

A confederate dressed as an employee stood 10 meters from the bananas, intercepting customers to read 1 of 4 messages to them. After asking “Are you going to buy bananas?” (p. 92) and getting an affirmative answer, the message delivered by the confederate was one of these (each treated as a different 'nudge' in the meta-analyses):

1. “We have environment friendly labeled and non-environment friendly bananas right over there.”

2. “Our eco-labeled bananas are situated right next to our employee standing there.”

3. “You seem interested in eco-labeled products – you can find them here.”

4. “Our eco-labeled bananas are priced no higher than any competing brands without labels.”

Compared to a control condition, the share of banana sales that were organic went up 15, 43, 51, and 51 percentage points, respectively. These last three effect sizes are (literally) off-the-charts, as the Econometrica article's Figure 3 plots all effect sizes except these 3 because they do not fit on the y-axis. Let’s make sure everyone understands the finding: merely informing people that eco/organic and regular bananas have the same price – something they would presumably learn anyway once they inspect the posted prices when grabbing the bananas – tripled the share of the eco/organic bananas from 25% to 76%. Removing this single paper from the meta-analysis drops the average effect of nudging in academic papers from 8.7% to 6.4%, a 26% decrease [1].
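The 26% figure is a relative decrease. A minimal sketch of that arithmetic, using only the two averages quoted above (the function name is ours, for illustration):

```python
def relative_decrease(before: float, after: float) -> float:
    """Return the drop from `before` to `after` as a fraction of `before`."""
    return (before - after) / before


# Average effect in academic papers (percentage points),
# with and without the bananas paper, as quoted in the text.
drop = relative_decrease(8.7, 6.4)
print(f"{drop:.0%}")  # prints 26%
```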

We have two concerns about this study's validity. First, participants were not randomly assigned to the control vs. treatment conditions. The authors did change the treatment every 30 shoppers, "in order to counterbalance the effects of time of day and day of the week" (p. 93), but they did not do this for the control condition. Instead, they compared the treatments to a baseline based on “sales data from the previous week” (p. 92), and that baseline served as the control. Differences between control and treatments therefore lack internal validity. For example, maybe the eco/organic bananas were browner or pricier last week or maybe people with different preferences show up at different times. Our second concern is that the data collection is described with insufficient detail to understand the possible role of confounds, demand effects by the experimenter, or perhaps more serious anomalies. Given the off-the-charts effect sizes, we believe at least one of these problems is likely present. We relegate this discussion to a footnote since the first concern, lack of internal validity, is enough to merit exclusion from a meta-analysis [2].

(Pictures not in the original paper)


Invalid Study #2. The 4th largest effect is by someone guilty of research misconduct.

The fourth largest effect comes from a study (.htm) conducted by Mr. Wansink, formerly a full professor at Cornell, who left academia after an official university investigation found him guilty of many forms of academic misconduct, “including data falsification” (source: Cornell provost public statement .htm).

We believe that papers authored by fraudsters should be treated as invalid unless a co-author actively and explicitly vouches for their validity (putting their reputation behind it). In early November 2022, we reached out to Wansink's only co-author on this paper, asking if he would vouch for the study's validity. We then shared with him a draft of this post. As of this writing he has not responded [3].

Invalid Study #3. The 7th largest effect is a transcription error

Looking at the largest effects in meta-analyses can be an efficient way to find invalid studies, but not all large effects are invalid, nor are all invalid effects large. Here the 5th and 6th largest effects seemed like valid tests of the meta-analytic question. But the 7th, discussed below, did not.

A study published in JAMA Pediatrics in 2015 (.htm), investigated an intervention designed to increase fruit and vegetable consumption in school cafeterias. The study involved 12 public schools, using a 2 (presence/absence of nudges) x 2 (presence/absence of professional chef) experimental design [4]:

1. Control: neither treatment

2. Treatment 1: Nudges (e.g., fruits in attractive containers; prominent signs advertising fruits and vegetables)

3. Treatment 2: Professional chef changes all food offerings (see sample cookbook: .pdf) [5]

4. Both: Nudges and professional chef

Two comparisons provide valid estimates of “the effect” of nudging: (2)-(1) and (4)-(3).

Both meta-analyses correctly include: (2)-(1), good for a +3% effect.

The Econometrica meta-analysis also incorrectly includes (4)-(1), good for a +27% effect.

That (4)-(1) comparison confounds the effect of a nudge with the effect of a new health-conscious chef.

In addition, both meta-analyses chose the wrong numbers for those effects: the 3% and 27% come from comparisons between conditions only after the experiment was run, ignoring differences between conditions that were nearly as large before the experiment. That 27% should actually be 5% (see footnote [6]).

Interlude: what's our generalizable claim?

It is worth making explicit what we are and are not claiming here. We are not saying "look, these three studies are invalid, so we now believe most studies in these nudge meta-analyses are invalid". We selected our examples from studies with some of the biggest effects, and we know those are more likely to be invalid. What we are saying is "look, this meta-analysis includes multiple studies that represent invalid tests of the meta-analytic research question. We believe this is typical of most meta-analyses."

After we shared a draft of this post with the authors of the Econometrica paper, they did a further check of data quality, going beyond these 7 results to check the top 37 (out of 74) effects. They found only one additional study that may merit exclusion. See the blue font at end of post for their interpretation of this result.

2) Incommensurable results

Let's now move on to our second concern with meta-analytic averages, that they combine effects that do not make sense to combine.

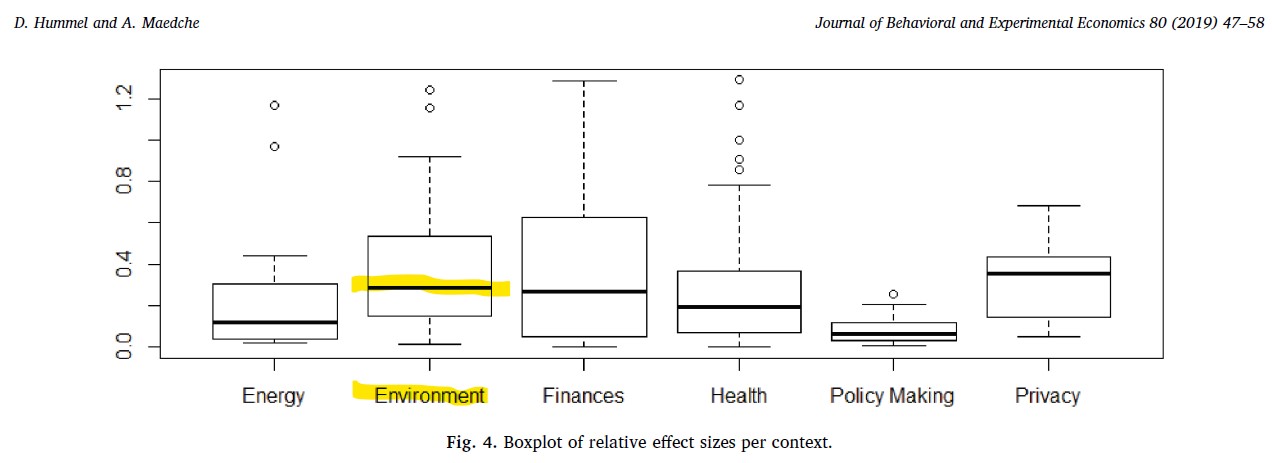

Both meta-analyses considered the fact that there is heterogeneity across nudge studies. In fact, such heterogeneity is the question of interest to the authors of the JBEE article. But, in our opinion, they did not – and probably could not – do this in a way that produced meaningful (sub)means. For example, the JBEE article breaks down “the effect” of nudging by “context” (their Figure 4 is reprinted below):

Are those subgroup means meaningful? Do they average over commensurable results?

Let's audit the three "Environment" studies that were included in both meta-analyses (the JBEE meta-analysis included several additional 'environment' nudge papers).

Environmental Study #1: Junk mail

In an Economics Letters paper published in 2015 (.htm), the authors attempted to increase the share of homes with a “no junk mail” sticker on their mailbox. They sent stickers to 800 homes and randomly assigned each home to receive 1 of 4 messages of increasing strength: (1) control, no message, (2) an explanation that junk mail causes lots of waste, (3) added that in 2 weeks the researchers would monitor if a sticker was placed on their homes, and (4) added a small gift if they did. The shares of homes with stickers were 22%, 25%, 29% and 35%.

Environmental Study #2: Flying economists

In a paper published in the Journal of Environmental Economics and Management (2012 .htm), the authors reported an experiment seeking to increase the share of economists willing to pay a fee to carbon-offset their travel to an upcoming conference. Economists who had to opt-in to pay the fee paid it 39% of the time, whereas those who had to opt out of paying the fee paid it 44% of the time.

Environmental Study #3: Again, bananas

The bananas study was part of the environmental mean.

Meaningless environmental mean

While these three studies are loosely speaking related to “the environment”, it’s unclear to us how to decipher the meaning of the mean that combines the effect of (1) telling people all bananas cost the same on the share of eco bananas purchased, (2) telling households a researcher is coming to check their stickers on placing said stickers, and (3) defaulting academics into a CO2 fee on paying that fee. The incommensurability problem is made worse by the fact that readers of the meta-analysis don’t even know that those are the studies that are being averaged. All they know is many studies loosely related to the environment have an average effect of d = .3.

To put this problem in perspective, imagine an article tells you that 30% of voters supported an environmental policy, but not which policy. Would a newspaper publish an article saying that? Would you want to read that article?


Author feedback:

Our policy (.htm) is to share drafts of blog posts with authors whose work we discuss, in order to solicit suggestions for things we should change prior to posting, and to invite them to write a response that we link to at the end of the post. We contacted (i) the authors of the Econometrica and JBEE meta-analyses, and (ii) the authors of the 5 individual papers we discuss.

1) The authors of the Econometrica article, as mentioned above, did additional work re-examining other studies in the meta-analysis, and provided a thoughtful response. Their full reaction touches on points of agreement and disagreement with this post. They also sent this quote to include in this post:

"This [their re-analysis of the biggest 37 results] confirms that the invalid effect sizes are concentrated at the top, an important lesson for future studies. After dropping the problematic studies, we recalculate the average effect size from 8.7 pp. to 6.4 pp. In our paper, we compare nudge papers in the literature, which is the focus of this post, to interventions by Nudge Units, which this post does not consider. The effect size one would glean from nudge papers in the literature, 6.4 pp. in the revised calculation, is still significantly larger than the 1.4 pp. for Nudge Units, a difference that in the paper we largely attribute to publication bias, as well as differences in features of the studies."

Their full response: (.pdf).

2) The first author of the eco bananas paper, Per Kristensson, after answering some of our questions about how the data were gathered, shared his thoughts on their paper in light of our post, noting among other things that the baseline "is not optimal and represents a potential bias (but at least gave us some comparable data…)". See full response (.pdf)

3) The authors of the paper on CO2 offset fees responded but did not suggest any changes. The authors of the JBEE meta-analysis did not respond, nor did the authors of the other original papers mentioned above.

Footnotes.

- Here we are dropping effects from both Studies 1 and 2. Study 2 involves putting the above messages on a sign instead of having a confederate read them out loud. Effects were still very large – 14, 19, and 19 percentage points – but of course smaller than those reported in Study 1. We compute the means in Excel giving equal weight to each study; this is extremely close to what a 'random effects' meta-analysis does. [↩]

- Bananas study (on the insufficient details on data collection).

We are puzzled by how this experiment could have generated the data that is reported in the paper. We discuss three issues.

Eco/organic vs regular. First, it is not clear how the authors could have known what type of bananas people purchased, eco/organic vs. regular, since the confederate was about 10 meters away from shoppers when they were picking their bananas. The store would not be able to give researchers these data, because only the confederate knew what condition shoppers were assigned to (also, a shopper could presumably buy bananas after avoiding the confederate altogether). We emailed the authors asking how the data were gathered. Per Kristensson replied, on September 5th, 2022, indicating that they received the data from the "Confederate who took notes" but also "directly from the register during the dates we spent time in the store". In a later email he indicated that there was a second confederate who changed clothes between conditions. That's the one who would have taken the notes.

Baseline data. Second, it is unclear to us just what data were used for the baseline. The paper does not say anything about it. Oddly, however, the statistical results in the paper imply there were also 100 observations in the baseline: the paper reports that comparing baseline (unknown n) with treatments (n=400), which are 25% vs 40%, leads to p=.01 in a chi2 test. Solving for n, we get 83 < n < 112 (R Code). Why would the store give researchers only 100 rows of data from an entire week of sales to use as baseline? In another email, when we asked about n=100, we were told that those baseline sales actually constitute all the sales during the previous week, and the share of eco bananas corresponds to the relative weight of eco bananas sold for the week (so the authors would have compared the share of bananas at baseline vs. share of customers buying eco bananas in the treatments). But this appears to be inconsistent with the results in the paper, which, again, imply n=100 in the control.
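For readers who want to see how a baseline sample size can be backed out of a reported p-value, here is a rough Python sketch. It is not the R code linked above. It assumes (our assumptions, not stated in the paper) a 2x2 chi-square test with Yates' continuity correction, that "p=.01" means a p-value between .005 and .015, and that cell counts may be fractional (share times n). The function names are hypothetical.

```python
import math


def yates_p_value(n_base, share_base, n_treat, share_treat):
    """P-value of a 2x2 chi-square test (1 df) with Yates' continuity correction."""
    a = share_base * n_base      # baseline shoppers buying eco bananas
    b = n_base - a               # baseline shoppers buying regular bananas
    c = share_treat * n_treat    # treated shoppers buying eco bananas
    d = n_treat - c              # treated shoppers buying regular bananas
    total = n_base + n_treat
    numerator = total * max(abs(a * d - b * c) - total / 2, 0.0) ** 2
    denominator = (a + b) * (c + d) * (a + c) * (b + d)
    chi2 = numerator / denominator
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


def compatible_baseline_sizes(lo=0.005, hi=0.015, candidates=range(10, 1001)):
    """Baseline sizes whose p-value would be reported as .01 (assumed rounding rule)."""
    return [n for n in candidates
            if lo <= yates_p_value(n, 0.25, 400, 0.40) < hi]


sizes = compatible_baseline_sizes()
print(min(sizes), max(sizes))
```

Under these assumptions the compatible range should land close to the one quoted above; the exact endpoints depend on the rounding convention and on whether a continuity correction is applied.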

Multiple of 30. Lastly, and more minor, the paper indicates that condition assignment was changed after every 30 shoppers, but sample sizes are all n=100, not a multiple of 30. When asked about this, the authors indicated that when a condition had n=100, they stopped assigning participants to it.

[↩]

- This is the only Wansink study in the Econometrica article; the JBEE article includes another one (with the same co-author). The meta-analysis discussed in our previous post (Colada[105]) included several Wansink articles, but because the authors of that meta-analysis reported the results both with and without those studies, we did not discuss this issue in that post. [↩]

- The design was a bit more complex, using a staggered introduction of treatments across schools, so every school started with no treatment and ended with both. [↩]

- Details on chef's intervention in JAMA paper. It reads (p. 432) "The recipes were created using cost-effective commodity foods available to schools and incorporated whole grains, fresh and frozen produce […] The students were repeatedly exposed to several new recipes on a weekly basis during the 7-month intervention period." The text edited out in […] includes the link to the cookbook that we provide in our main text. Nudging is a broad and ill-defined category, but it is hard to argue this type of intervention would qualify as a nudge. [↩]

- Wrong numbers chosen from the JAMA paper: The authors of the JAMA paper ran the study with just 12 schools, randomizing at the school level. The schools have very large pre-study differences that the JAMA authors attempt to control for, but neither meta-analysis does. Specifically, the meta-analysts selected as the effects of treatment the differences between conditions after the experiment, without controlling for the differences that existed before it (they did a diff, instead of a diff-in-diff). To give you a sense of the magnitudes involved, let's look at the (4)-(1) comparison that was included only in the Econometrica article.

Before the experiment, (4) vs. (1) schools differed by 21.6% (see JAMA Table 2 .png)

After the experiment, (4) vs. (1) schools differed by 27.0% (see JAMA Table 4 .png)

Thus, the effect is only 5.4% (27.0 - 21.6), but the meta-analysts used 27% as the effect.
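The gap between a diff and a diff-in-diff in this example can be sketched in a few lines of Python. The two inputs are the before and after gaps quoted above; the function name is ours, for illustration only.

```python
def diff_in_diff(gap_before: float, gap_after: float) -> float:
    """Post-intervention gap between conditions, net of the gap that already existed."""
    return gap_after - gap_before


gap_before = 21.6  # (4) vs. (1) before the experiment, per JAMA Table 2
gap_after = 27.0   # (4) vs. (1) after the experiment, per JAMA Table 4

print(f"diff only:    {gap_after:.1f}")                             # 27.0
print(f"diff-in-diff: {diff_in_diff(gap_before, gap_after):.1f}")   # 5.4
```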

Again, the JAMA paper controls for baseline, only the meta-analysts do not. Lastly, for an unstated reason, the Econometrica meta-analysis includes only the effect on fruits, not vegetables, and the JBEE meta-analysis includes only (2)-(1), not (4)-(3). All results are presented side by side in the JAMA paper. [↩]