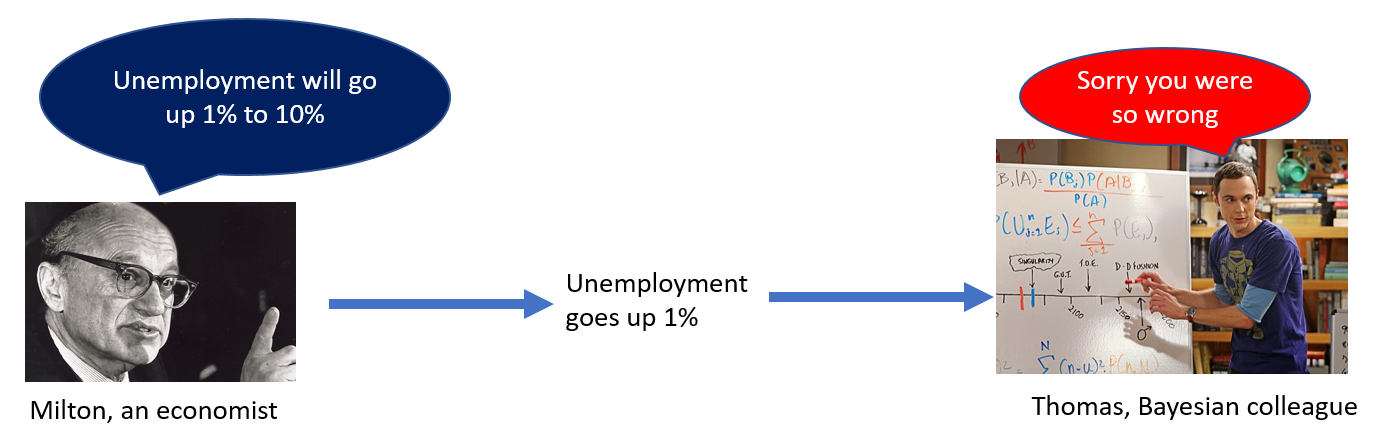

Would raising the minimum wage by $4 lead to greater unemployment? Milton, a Chicago economist, has a theory (supply and demand) that says so. Milton believes the causal effect is anywhere between 1% and 10%. After the minimum wage increase of $4, unemployment goes up 1%. Milton feels bad about the unemployed but good about his theory.

But then, Thomas, a Bayesian colleague, tells Milton to wipe that smile off his face, because his prediction was wrong. The Bayes factor for that 1% increase in unemployment ‘supports the null’ of no effect of wage on unemployment.

In this post I unpack this realistic scenario to explain two of the many reasons I don't use Bayes factors in my research.

Note: The numbers in this example were slightly changed a few hours after posting in response to an email from Zoltan Dienes (.htm). See footnote for details [1]. I also archived the original post (.html).

What Bayes Factors Do

Bayes factors provide the results of a horse-race. They tell us how much more consistent the data are with an alternative hypothesis than with the null hypothesis. For example, if a pregnancy test is 10 times more likely to come up positive when pregnant than when not, then the Bayes factor is BF=10 for a positive result.
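As a minimal sketch of that arithmetic in Python: the two probabilities below are hypothetical, chosen only so that their ratio is 10, and the function name is made up for illustration.

```python
def bayes_factor(p_data_given_alternative, p_data_given_null):
    """Ratio of how likely the data are under each hypothesis."""
    return p_data_given_alternative / p_data_given_null


# Hypothetical test characteristics (assumptions, not from any real test).
p_positive_if_pregnant = 0.90
p_positive_if_not_pregnant = 0.09

bf_positive = bayes_factor(p_positive_if_pregnant, p_positive_if_not_pregnant)
print(f"BF for a positive result: {bf_positive:.1f}")
```

With a single-value alternative like this one, the Bayes factor is just a ratio of two likelihoods.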

The BF for the pregnancy test is simple because the alternative hypothesis involves only one possibility: “being pregnant”. But BFs are usually complicated because the alternative hypotheses they consider include many possibilities.

In the minimum wage example, the alternative hypothesis isn’t just that “the minimum wage matters”. To compute a Bayes factor we need to specify how much we think that it matters. Or actually, how likely each possible effect size is. How likely is a 2% increase in unemployment, how likely a 2.1%, a 5%, a 5.1%, etc.

It seems impossible to use a social science theory like supply and demand to meaningfully generate such numbers. But let’s try it so that this post is not overly short.

Milton said the effect may be “anywhere between 1% and 10%”. Let’s take that unreasonably literally to mean: “my belief about the true effect on unemployment is captured by a uniform distribution between 1% and 10%.” [2].

With our uniform distribution in hand, we compute the Bayes factor by asking if the observed effect, 1%, is closer to the null of 0%, or to “the alternative”, the uniform 1%-10%. If it is sufficiently closer to 0%, we "accept" the null.

Interlude

Bayes factors, then, compare the null to “the alternative”. Implementing this comparison requires doing two things that I think most researchers would object to, if they realized that that’s what they were doing when they reported Bayes factors in their papers.

Thing 1. Falsifying something we never tested

To compute a Bayes factor we need to generate “the alternative” hypothesis. In social science, theory alone will not deliver one. So we need a substitute for the actual theory to be compared to the null.

Bayesian advocates recommend a variety of substitutes for generating “the alternative”. For example, that we rely on our intuition of how big the effect is, on previous findings, on what we can afford to study, etc. [3].

Note: By theory I merely mean the rationale for investigating the effect of x on y. A theory can be as simple as “I think people value a mug more once they own it”.

Imagine I am the first person to investigate whether arbitrary anchors affect the perceived length of rivers. I want to compute a Bayes factor, so I need to specify “the alternative” hypothesis. To do that I need to convert the theory “higher anchors lead to higher guesses of river lengths” into a distribution that assigns a likelihood to every possible true anchoring effect size. I am as clueless as you are at figuring out what in the world that distribution is, so I follow the advice from some Bayesian papers and I look up similar previous findings. I find studies of anchoring on perceived door height, and the average effect size of those is d̂=.8. So I use as “the alternative” hypothesis one that gives d=.8 some reasonable prior probability.

I run my study and get d̂=.24, p<.0001.

While d̂=.24 is not nothing, it happens to be closer to the null of 0 than to “the alternative”, on average. So the Bayesian analysis tells me to “accept the null.”

But I have not falsified anchoring. I have not even falsified anchoring for rivers.

All I have falsified is the irrelevant hypothesis that anchoring for rivers is like anchoring for doors.

All I have falsified is a hypothesis I considered only so that I could run the Bayes factor, one I was never interested in.

Thing 2: Rejecting theories that predict what we observe

What would it take for you to conclude that the minimum wage does not increase unemployment? If you are like me, it would be something like this: “if the estimated effect on unemployment were close enough to zero to rule out consequential effects, I would accept the null.”

That's easy enough to do with a confidence interval, but it is not at all what Bayes Factors do.

Bayes factors don’t compare the observed effect to the smallest effect of interest.

Bayes factors compare the observed effect to every effect that is consistent with ‘the alternative’.

That’s why a BF can interpret a 1% effect on unemployment as falsifying the prediction that the effect will be anywhere between 1% and 10%. Unlike you and me, the Bayes factor does not ask “is 1% within the predicted range?” The BF asks:

“How likely is it that we would observe a 1% effect, if the true effect were 10.0%?”, and

“How likely is it that we would observe a 1% effect, if the true effect were 9.9%?”, and

…

“How likely is it that we would observe a 1% effect, if the true effect were 1.0%?”

It then averages all those numbers. And, if that average is sufficiently small, it ‘accepts the null’.

In other words, because observing an effect of 1% is unlikely if the true effect were 10% or 7% or 5%, the BF “accepts the null,” even though the observed effect was within the predicted range.

If Milton had said “the effect is anywhere between 1% and 2%” then observing 1% would “support the alternative.”

If Milton had said “the effect is anywhere between -50% and +50%”, a prediction that is never false, the Bayes factor would always deem it false, because observed values would always be, on average, unlikely, under ‘the alternative.’
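To make the averaging concrete, here is a rough Python sketch of the three scenarios above. It is not the R code mentioned in the footnotes. It assumes the estimate is normally distributed around the true effect, and the standard error of 0.5 percentage points is a hypothetical value picked for illustration; `bf10_uniform` and the other names are invented for this sketch.

```python
import math


def normal_pdf(x, mean, sd):
    """Density of a normal distribution at x."""
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2 * math.pi))


def normal_cdf(x, mean, sd):
    """Cumulative probability of a normal distribution at x."""
    return 0.5 * (1 + math.erf((x - mean) / (sd * math.sqrt(2))))


def bf10_uniform(observed, se, low, high):
    """Bayes factor for a uniform(low, high) alternative vs. a null of 0."""
    # Averaging normal_pdf(observed, theta, se) over theta ~ uniform(low, high)
    # has a closed form in terms of the normal CDF.
    likelihood_alt = (
        normal_cdf(high, observed, se) - normal_cdf(low, observed, se)
    ) / (high - low)
    likelihood_null = normal_pdf(observed, 0.0, se)
    return likelihood_alt / likelihood_null


OBSERVED = 1.0  # observed effect on unemployment, in percentage points
SE = 0.5        # ASSUMED standard error (hypothetical, not from the post)

bf_1_to_10 = bf10_uniform(OBSERVED, SE, 1.0, 10.0)
bf_1_to_2 = bf10_uniform(OBSERVED, SE, 1.0, 2.0)
bf_pm_50 = bf10_uniform(OBSERVED, SE, -50.0, 50.0)

for label, bf in [("1% to 10%", bf_1_to_10),
                  ("1% to 2%", bf_1_to_2),
                  ("-50% to +50%", bf_pm_50)]:
    print(f"alternative {label}: BF10 = {bf:.2f}")
```

Under these assumptions the Bayes factor comes out below 1 (leaning towards the null) for the 1%-10% alternative, above 1 for the 1%-2% alternative, and smallest of all for the -50% to +50% alternative. A different assumed standard error can change the numbers, which is part of why the choice matters.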

The Bayes factor approach to falsification, averaging how well every possible prediction fits the data, instead of whether the data is or is not consistent with a prediction, is different from how we falsify predictions in everyday life, in the scientific method, and when relying on standard statistical approaches.

What if “the alternative” hypothesis were not a distribution of values that we had to average over?

See this footnote [4].

OK, but how often does it actually happen that a Bayes factor deems a prediction within the set of hypothesized values as falsifying the hypothesis? Basically always. At least with default Bayes factors, every time the null is supported, the value we observe is predicted by the alternative. See footnote [5].
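A small Python sketch of that point, using the normal default described in footnote 3 (M=0, SD=.7) rather than any particular package's actual prior. The observed effect and its standard error are hypothetical, and the estimate is assumed to be normally distributed around the true effect.

```python
import math


def normal_pdf(x, mean, sd):
    """Density of a normal distribution at x."""
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2 * math.pi))


PRIOR_SD = 0.7  # default alternative from footnote 3: true d ~ Normal(0, 0.7)
D_HAT = 0.10    # hypothetical observed effect size
SE = 0.10       # hypothetical standard error of the estimate

# Under the null, the estimate is Normal(0, SE).
likelihood_null = normal_pdf(D_HAT, 0.0, SE)

# Under the alternative, averaging over the normal prior gives
# Normal(0, sqrt(PRIOR_SD**2 + SE**2)) for the estimate.
likelihood_alt = normal_pdf(D_HAT, 0.0, math.sqrt(PRIOR_SD**2 + SE**2))

bf01 = likelihood_null / likelihood_alt

# Density the alternative itself assigns to a true effect equal to D_HAT.
prior_density_at_d_hat = normal_pdf(D_HAT, 0.0, PRIOR_SD)

print(f"BF01 = {bf01:.2f}")
print(f"alternative's prior density at d = {D_HAT}: {prior_density_at_d_hat:.2f}")
```

With these made-up inputs the Bayes factor favors the null, even though the alternative gives positive density to a true effect of exactly the size observed.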

In sum.

To use Bayes factors to test hypotheses, you need to be OK with the following two things:

1. Accepting the null when “the alternative” you consider, and reject, does not represent the theory of interest.

2. Rejecting a theory after observing an outcome that the theory predicts.

This post, 78a, is the first in a series on Bayes factors. Post 78b is coming soon.


Footnotes.

- The original example had Milton predict 1%-6% and the truth come up 1.5%. Zoltan noted that a sensible operationalization of that description, relying on the normal distribution and assuming the 1.5% were statistically significantly different from 0, would actually lead to an inconclusive result that leans towards the alternative. I originally chose the values haphazardly just to convey the intuition and didn't think of approaching the example quantitatively; I should have. Sorry. And I should have chosen an example that led to a stronger paradox. Sorry again. In any case, after his email I tweaked the numbers so that a natural operationalization does lead to supporting the null. The R Code to see the original and modified example is posted here [↩]

- I keep saying true effect because “the alternative” is not about what effect you will get in your sample (the estimated effect); it is about what the true effect is in the world. [↩]

- Most commonly, however, the alternative hypothesis used in Bayes factor calculations reflects neither theory nor expectations. The alternative used in almost every Bayes factor you have seen, or will see, involves an arbitrary default distribution, e.g., that the effect is normally distributed with M=0 and SD=.7. This is a generic ad-hoc mathematization of what a generic effect may be, taking into account neither data nor theory. Note that this default “alternative” includes an effect of zero. Indeed, it considers it the most likely effect size. So the null is zero, and ‘the alternative’ also states the effect may be zero. [↩]

- Bayes factors can be used with “degenerate distributions”, that is, with a single-value alternative. For example: are the data more consistent with an unemployment effect of 0% or of 1%? These simpler Bayes factors:

(1) Don’t suffer from the problems explained in this post (as long as that single alternative value corresponds to the smallest effect of interest to the theory, or the user of the theory),

(2) Are equivalent to a (non-Bayesian) likelihood ratio test,

(3) Are easier to compute,

(4) Are usually objected to by Bayesian advocates for philosophical reasons I find lacking in merit (they think of the alternative as actually capturing prior beliefs, and hold that we should not give a probability of zero to possible outcomes in our beliefs. I find this lacking in merit because the alternative they use is not really about beliefs, so what's the point of demanding from it the nice properties that beliefs should have?), and

(5) I think could be used instead of traditional Bayes factors, and *in addition* to p-values. My next post in this series, Colada78b, is about this. [↩]

- The default "the alternative", e.g., the one used by the BayesFactor R package, involves a distribution centered at zero in which every real number is possible. Thus any observed value is consistent with the alternative defined this way, and therefore, every time you accept the null, you have observed a value that 'the alternative' gives some probability to being true. But this does not need to be the case. The alternative could be just one value (see previous footnote). Also, the null could be defined around zero, not exactly at zero, so that some observed values would belong to the null and not to the alternative. But, even in this latter case, it will still quite often be the case that a value predicted by the alternative and not by the null will lead the Bayes factor to support the null. [↩]