A recent NBER paper titled "Gender and the Dynamics of Economics Seminars" reports analyses of audience questions asked during 462 economics seminars, concluding that

“women are asked more questions . . . and the questions asked of women are more likely to be patronizing or hostile . . . suggest[ing] yet another potential explanation for their under-representation at senior levels within the economics profession” (abstract)

In this post I explain why my interpretation of the data is different.

My prior, before reading this paper, was that women were probably treated worse in seminars, especially in economics. But after reading this paper, I am less inclined to believe that.

Data Collection

The data were collected by 77 volunteers, mostly econ PhD students (73% female, many recruited at a diversity conference), who took notes while attending the seminars. Few data collection teams would be better able to identify possibly discriminatory behavior.

At the outset, I should say that I very much appreciate that this paper: (1) involved such an impressive data collection effort, (2) helps answer a policy-relevant question, and (3) presents the results with sufficient thoroughness as to allow readers (like myself) to make up their own minds based on the collected facts.

The Optimal Number of Questions To Get Is Not Zero.

The statistically strongest result in the paper is that women get about 3 more questions on average than do men (see Table 3, column 6).

The authors assume that this is bad for female speakers, but is it? It depends.

If it means that female speakers get asked too many questions, it is indeed bad for them.

If it means that female speakers don’t get asked too few questions, it is actually good for them.

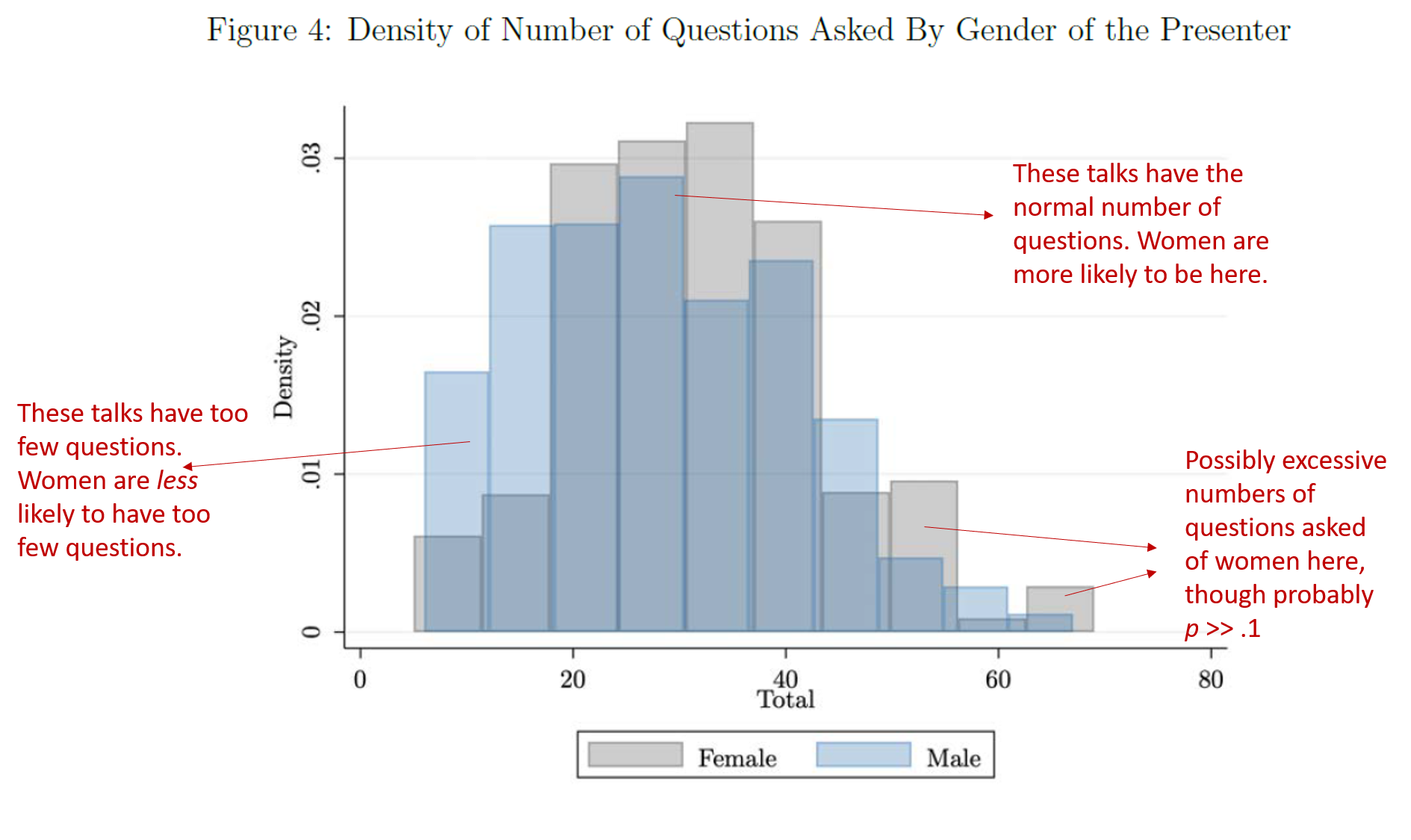

Figure 4 in the paper, shown here with my annotations added in red, helps us think about which of these two scenarios is at play.

The average number of questions asked is about 30 (most seminars in the sample last 80 or 90 minutes).

The figure shows that most of the gender difference in questions comes from the low tail [1]. Men are 2 to 3 times more likely than women to get very few questions.

If I were giving a talk to economists, and were asked which distribution I would prefer, I would choose the female speakers'. I prefer not giving talks with very few questions.
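To make the low-tail point concrete, here is a minimal sketch using made-up numbers, not the paper's data. It assumes a hypothetical world with two kinds of talks, "quiet" ones (about 10 questions) and "typical" ones (about 32 questions), and two groups of speakers who differ only in how often they give a quiet talk. The function name `simulate_talks` and every parameter value are my own illustrative assumptions.

```python
import random
import statistics

def simulate_talks(n, p_quiet, rng):
    """Simulate question counts for n talks.

    Hypothetical model (all numbers are made up for illustration):
    a share p_quiet of talks are 'quiet' (about 10 questions),
    the rest are 'typical' (about 32 questions).
    """
    counts = []
    for _ in range(n):
        center = 10 if rng.random() < p_quiet else 32
        counts.append(max(0, round(rng.gauss(center, 4))))
    return counts

def share_below(counts, cutoff):
    """Fraction of talks with fewer than `cutoff` questions."""
    return sum(c < cutoff for c in counts) / len(counts)

rng = random.Random(0)  # fixed seed so the sketch is reproducible
group_a = simulate_talks(5000, p_quiet=0.20, rng=rng)  # more quiet talks
group_b = simulate_talks(5000, p_quiet=0.07, rng=rng)  # fewer quiet talks

# The two groups share the same 'typical' talks; only the low tail differs.
mean_gap = statistics.mean(group_b) - statistics.mean(group_a)
median_gap = statistics.median(group_b) - statistics.median(group_a)
low_tail_ratio = share_below(group_a, 20) / share_below(group_b, 20)

print(f"difference in means:   {mean_gap:.2f}")
print(f"difference in medians: {median_gap:.2f}")
print(f"low-tail ratio (A/B):  {low_tail_ratio:.2f}")
```

In this toy setup the typical talk looks the same for both groups, yet the means differ by a few questions, because one group is simply less likely to land in the quiet tail. Whether that is what happens in the actual seminar data is exactly what the figure helps assess.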

Do Female Speakers Get More Antagonistic Questions?

Another result highlighted in the abstract is that the questions female speakers get “are more likely to be patronizing or hostile”.

Unlike the optimal number of total questions, the optimal number of hostile and patronizing questions is zero. So noticeable differences in hostility are easier to interpret.

But the evidence behind those claims seems insufficiently clear, in my opinion, to be interpretable, let alone actionable. Specifically, the evidence is:

- Statistically weak. The estimates are arguably small in magnitude (e.g., women get 0.1 extra hostile questions on average), and evidentially weak (the patronizing difference is p=.1, the hostile difference p=.02). Moreover, these two results were selected post-hoc from a larger set of measures collected, and the rest were not significant (e.g., whether questions were critical, or disruptive, or fair). Statistically at least, this is not strong evidence against the null that all observed differences are caused by chance.

- Conceptually inconclusive. Other estimates in the paper are conceptually inconsistent with the conclusion that female speakers receive worse treatment. For example, they get directionally fewer “criticism” questions than do male speakers (see Table 6). While this result is p > .1, so we cannot rule out zero difference, the estimate is precise enough to rule out women getting ¼ of an additional critical question per talk. Women also get an additional 1.7 clarification questions (p < .01), and half an additional suggestion (p < .1). Personally I like getting these kinds of questions, as they often signal audience engagement and can, of course, be useful.
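The post-hoc selection concern in the first bullet can be put in rough numbers. The sketch below assumes, purely for illustration, that exactly five question-tone measures were tested (the five named above: patronizing, hostile, critical, disruptive, fair) and that the tests are independent. The paper may have collected more measures, and they are surely correlated, so treat this as back-of-the-envelope arithmetic rather than a reanalysis.

```python
def prob_at_least_one_significant(num_tests, alpha=0.05):
    """Chance of at least one p < alpha among num_tests independent
    tests when every null hypothesis is true."""
    return 1 - (1 - alpha) ** num_tests

# ASSUMPTION: five measures; the actual number in the paper may differ.
num_measures = 5
false_alarm_rate = prob_at_least_one_significant(num_measures)

# Bonferroni-style threshold for the same hypothetical five tests.
bonferroni_threshold = 0.05 / num_measures

# p-values reported in the post for the two highlighted results.
reported = {"hostile": 0.02, "patronizing": 0.10}
survives = {name: p < bonferroni_threshold for name, p in reported.items()}

print(f"P(at least one p<.05 by chance): {false_alarm_rate:.3f}")
print(f"Bonferroni threshold:            {bonferroni_threshold:.3f}")
print(f"Survives correction:             {survives}")
```

Under these assumptions, the chance of at least one "significant" measure under the null is well above the nominal 5%, and neither highlighted p-value clears the corrected threshold.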

A pitch to economists: check out AsPredicted.org

While I was reading this paper, with its many measures and arguably surprising results, I found myself wishing that the study had been pre-registered. However, as the authors pointed out in my discussion with them, pre-registration in economics is only the norm for large field experiments (RCTs). So it is not remarkable or even notable that this study was not pre-registered; so few studies like this are. Nevertheless, I do think pre-registration should be much more common in economics.

In sum

1. The data reported in this paper show that female speakers are less likely to get too few questions. That is probably good for them.

2. The data are inconclusive regarding whether male or female speakers receive a disproportionate number of hostile, critical, or helpful questions. They do suggest, however, that any such differences are likely to be small.


Author feedback

Our policy is to share drafts of blog posts that discuss someone else's work with them to solicit suggestions for things we should change prior to posting. The authors politely pointed out a fact I had misunderstood from their paper (I thought some data were missing, but they weren’t), and pointed out that in economics pre-registration (except for RCT studies) is unusual (this prompted the AsPredicted pitch).

One author remarked that “we are not taking a stand on the optimal number of questions. If we had found that women got *fewer* questions we'd have written the same paper. The main take-away is that the culture is not gender neutral. It is indicative of (implicit) bias. Audiences are not blind to gender.” Another author pointed out that in their view it is not clear if getting more clarification questions is good or bad, as it could mean that people are not paying attention or listening. They also pointed out that for this reason they were “very careful not to provide interpretations when we don't have them or know”. The exchange was as polite, prompt, and constructive as they come. The authors approved of me posting these quotes.

I truly appreciate their feedback.

Footnotes

[1] It is possible the pattern shown in the figure captures observed differences between men and women presenters, e.g., what field they are in. The authors address this issue by adding covariates in their regressions, but not in this descriptive chart. Remaking this plot for the residuals from the regression in their Table 3 Column 6, without gender as a predictor, would help understand how much of the gender difference in the distribution of questions asked is attributable to observed differences. I assume that little would change because the coefficient for the gender difference changes little from Column 1 (b=3.5), without covariates, to Column 6 (b=3.3) with covariates; but I don’t know for sure.
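The residual-based replot suggested in this footnote could be sketched roughly as follows. Everything here is simulated stand-in data, not the paper's: I assume a single hypothetical covariate (seminar length in minutes) in place of the paper's full covariate set, a made-up gender gap of 3 questions, and a by-hand one-variable least-squares fit. The idea is only to show the steps: fit the model without gender, take residuals, then compare the residual distributions by speaker gender.

```python
import random
import statistics

rng = random.Random(1)  # fixed seed for reproducibility

# Simulated stand-in data (NOT the paper's data). Each talk has a
# hypothetical covariate (minutes) and a speaker-gender flag.
talks = []
for _ in range(2000):
    female = rng.random() < 0.3
    minutes = rng.choice([60, 80, 90])
    questions = 5 + 0.3 * minutes + (3 if female else 0) + rng.gauss(0, 8)
    talks.append((female, minutes, questions))

# Step 1: regress questions on the covariate only, leaving gender out.
xs = [m for _, m, _ in talks]
ys = [q for _, _, q in talks]
x_bar, y_bar = statistics.mean(xs), statistics.mean(ys)
slope = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
         / sum((x - x_bar) ** 2 for x in xs))
intercept = y_bar - slope * x_bar

# Step 2: residuals are the part of the question count that the
# covariate does not explain.
residuals = [(f, q - (intercept + slope * m)) for f, m, q in talks]

# Step 3: compare the residual distributions by speaker gender.
female_res = [r for f, r in residuals if f]
male_res = [r for f, r in residuals if not f]
residual_gap = statistics.mean(female_res) - statistics.mean(male_res)

# Deciles stand in for the plot: a gap concentrated in the low deciles
# would mirror the low-tail pattern in the descriptive figure.
female_deciles = statistics.quantiles(female_res, n=10)
male_deciles = statistics.quantiles(male_res, n=10)

print(f"gap in mean residuals: {residual_gap:.2f}")
for i, (f_d, m_d) in enumerate(zip(female_deciles, male_deciles), start=1):
    print(f"decile {i}: female {f_d:6.2f}   male {m_d:6.2f}")
```

With the real data, one would replace the simulated talks and the single covariate with the covariates from the paper's regression, then plot the two residual distributions the same way the descriptive chart plots raw question counts.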