It is common for researchers running replications to set their sample size assuming the effect size the original researchers got is correct. So if the original study found an effect-size of d=.73, the replicator assumes the true effect is d=.73, and sets sample size so as to have 90% chance, say, of getting a significant result.

This apparently sensible way to power replications is actually deeply misleading.

Why Misleading?

Because of publication bias. Given that (original) research is only publishable if it is significant, published research systematically overestimates effect size (Lane & Dunlap, 1978). For example, if sample size is n=20 per cell, and true effect size is d=.2, published studies will on average estimate the effect to be d=.78. The intuition is that overestimates are more likely to be significant than underestimates, and so more likely to be published.

If we systematically overestimate effect sizes in original work, then we systematically overestimate the power of replications that assume those effects are real.

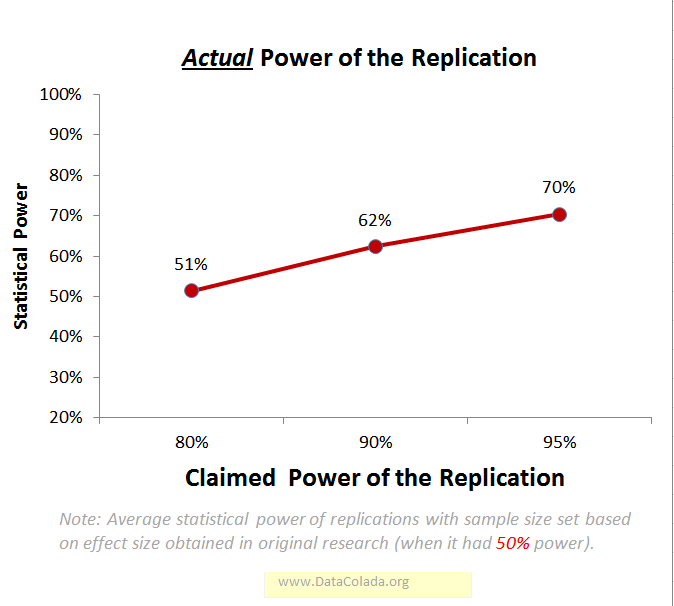

Let’s consider some scenarios. If original research were powered to 50%, a highly optimistic benchmark (Button et al, 2013; Sedlmeier & Gigerenzer, 1989), here is what it looks like:

So replications claiming 80% power actually have just 51% (Details | R code).

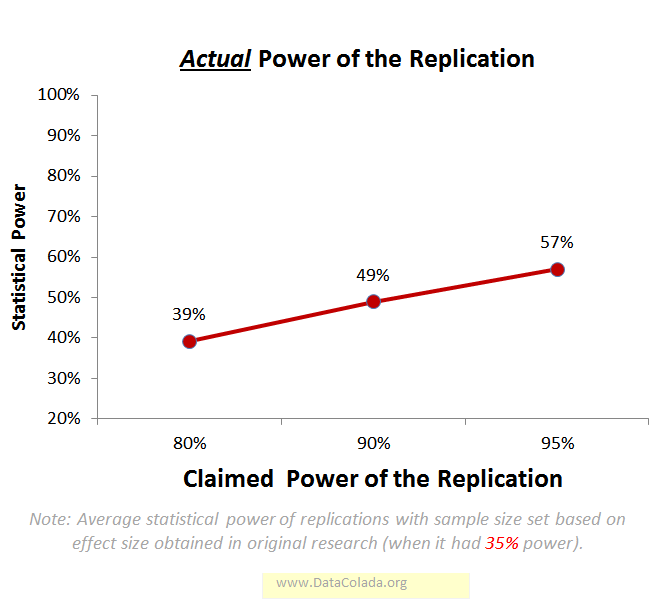

Ok. What if original research were powered at a more realistic level of, say, 35%:

The figures show that the extent of overclaiming depends on the power of the original study. Because nobody knows what that is, nobody knows how much power a replication claiming 80%, 90% or 95% really has.

A self-righteous counterargument

A replicator may say:

Well, if the original author underpowered her studies, then she is getting what she deserves when the replications fail; it is not my fault my replication is underpowered, it is hers. SHE SHOULD BE DOING POWER ANALYSIS!!!

Three problems.

1. Replications in particular and research in general are not about justice. We should strive to maximize learning, not schadenfreude.

2. The original researcher may have thought the effect was bigger than it is, she thought she had 80% power, but she had only 50%. It is not “fair” to "punish" her for not knowing the effect size she is studying. That's precisely why she is studying it.

3. Even if all original studies had 80% power, most published estimates would be over-estimates, and so even if all original studies had 80% power, most replications based on observed effects would overclaim power. For instance, one in five replications claiming 80% would actually have <50% power (R code).

What’s the alternative?

In a recent paper (“Evaluating Replication Results”) I put forward a different approach to thinking about replication results altogether. For a replication to fail it is not enough that p>.05 in it, we need to also conclude the effect is too small to have been detected in the original study (in effect, we need tight confidence intervals around 0). Underpowered replications will tend to fail to reject 0, be n.s., but will also tend to fail to reject big effects. In the new approach this result is considered as uninformative rather than as a "failure-to-replicate." The paper also derives a simple rule for sample size to be properly powered for obtaining informative failures to replicate: 2.5 times the original sample size ensures 80% power for that test. That number is unaffected by publication bias, how original authors power their studies, and the study design (e.g., two-proportions vs. ANOVA).