Most scientific studies document a pattern for which the authors provide an explanation. The job of readers and reviewers is to examine whether that pattern is better explained by alternative explanations.

When alternative explanations are offered, it is common for authors to respond that although, yes, each study has potential confounds, no single alternative explanation can account for all studies. Only the author’s favored explanation can parsimoniously do so.

This is a rhetorically powerful line. Parsimony is a good thing, so arguments that include parsimony-claims feel like good arguments. Nevertheless, such arguments are actually kind of silly.

(Don't know the term Occam's Razor? It states that among competing hypotheses, the one with the fewest assumptions should be selected. Source: Wikipedia.)

Women are taller than men

A paper could read something like this:

While the lay intuition is that human males are taller than their female counterparts, in this article we show this perception is erroneous, referring to it as “malevation bias.”

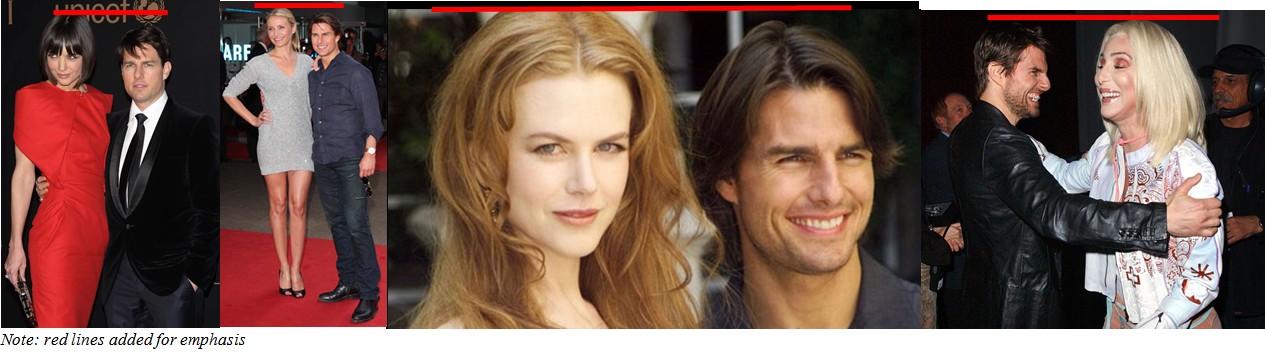

In Study 1, we found that (male) actor Tom Cruise is reliably shorter than his (female) partners.

In Study 2 we found that (female) elementary school teachers were much taller than their (mostly male) students.

In Study 3 we found that female basketball players are reliably taller than male referees.

The silly Occam’s razor argument

Across three studies we found that women were taller than men. Although each study is imperfect (for example, an astute reviewer suggested that age differences between teachers and students may explain Study 2), the only single explanation that’s consistent with the totality of the evidence is that women are in general indeed taller than men.

Parsimony favors different alternative explanations

One way to understand this misuse of parsimony is to recognize that the set of studies is not representative of the world. The results were not randomly selected; they were chosen by the author to make a point.
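To make the selection problem concrete, here is a minimal simulation sketch. The population means and standard deviation are hypothetical numbers picked for illustration, not data from any study, and the names `draw_pairs` and `share_woman_taller` are made up for this example. It compares how often the woman is taller across all randomly drawn woman-man pairs versus across only the pairs an author chooses to report.

```python
import random

random.seed(0)  # fixed seed so the sketch is reproducible

# Hypothetical population parameters in cm (illustrative assumptions only)
MEN_MEAN_CM, WOMEN_MEAN_CM, SD_CM = 175.0, 162.0, 7.0


def draw_pairs(n):
    """Draw n random (woman, man) height pairs from the assumed population."""
    return [
        (random.gauss(WOMEN_MEAN_CM, SD_CM), random.gauss(MEN_MEAN_CM, SD_CM))
        for _ in range(n)
    ]


def share_woman_taller(pairs):
    """Fraction of pairs in which the woman is taller than the man."""
    return sum(w > m for w, m in pairs) / len(pairs)


all_pairs = draw_pairs(10_000)

# An author "making a point" reports only the pairs that fit the story.
reported_pairs = [(w, m) for w, m in all_pairs if w > m]

random_share = share_woman_taller(all_pairs)
reported_share = share_woman_taller(reported_pairs)

print(f"all pairs:      {random_share:.2f}")
print(f"reported pairs: {reported_share:.2f}")
```

Under these assumed parameters the woman is taller in only a small minority of the random pairs, yet in every one of the reported pairs. The reported subset is perfectly consistent with "women are taller than men" only because of how it was assembled.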

Parsimony should be judged looking at all evidence, not only the selectively collected and selectively reported subset.

For instance, although the age confound with height is of limited explanatory value when we only consider Studies 1-3 (it only accounts for Study 2), it has great explanatory power in general. Age accounts for most of the variation in height we see in the world.

If three alternative explanations are needed to explain a paper, but each of those explanations accounts for a lot more evidence in the world than the novel explanation proposed by the author to explain her three studies, Occam's razor should be used to shave off the single new narrow theory, rather than the three existing general theories.

How to deal with alternative explanations then?

Conceptual replications help examine the generalizability of a finding. As the examples above show, they do not help assess if a confound is responsible for a finding, because we can have a different confound in each conceptual replication. [1]

Three ways to deal with concerns that Confound A accounts for Study X:

1) Test additional predictions Confound A makes for Study X.

2) Run a new study designed to examine if Confound A is present in Study X.

3) Run a new study that’s just like Study X, lacking only Confound A.
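Option 3 can be sketched with the teacher example from Study 2. This is a toy simulation under stated assumptions: the mean heights and the helper names `sample` and `gap` are hypothetical and chosen only for illustration. The original design compares female teachers with schoolboys, so age differs between groups; the rerun keeps the same teachers but compares them with adult men, removing only the age confound.

```python
import random
from statistics import mean

random.seed(1)  # fixed seed so the sketch is reproducible

# Hypothetical mean heights in cm (illustrative assumptions only)
ADULT_WOMAN_CM, ADULT_MAN_CM, SCHOOLBOY_CM, SD_CM = 162.0, 175.0, 130.0, 7.0


def sample(mean_cm, n=500):
    """Draw n simulated heights around the given mean."""
    return [random.gauss(mean_cm, SD_CM) for _ in range(n)]


def gap(women, men):
    """Mean female height minus mean male height, in cm."""
    return mean(women) - mean(men)


teachers = sample(ADULT_WOMAN_CM)

# Study X as run: female teachers vs. male students (age is confounded with sex).
original_gap = gap(teachers, sample(SCHOOLBOY_CM))

# Study X rerun, lacking only Confound A: same teachers vs. adult men.
rerun_gap = gap(teachers, sample(ADULT_MAN_CM))

print(f"original design gap: {original_gap:+.1f} cm")
print(f"age-matched gap:     {rerun_gap:+.1f} cm")
```

In this sketch the gap is positive in the original design and negative once age is matched, which is what we would expect if the confound, rather than the proposed effect, produced the original result. A finding that survives the rerun would instead count against the confound.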

Running an entirely different Study Y is not a solution for Study X. An entirely different Study Y says, “Given the identified confounds with Study X, we have decided to give up and start from scratch with Study Y.” And Study Y had better be able to stand on its own.
