Recent past and present

The leading empirical psychology journal, Psychological Science, will begin requiring authors to disclose flexibility in data collection and analysis starting in January 2014 (see editorial). The leading business school journal, Management Science, implemented a similar policy a few months ago.

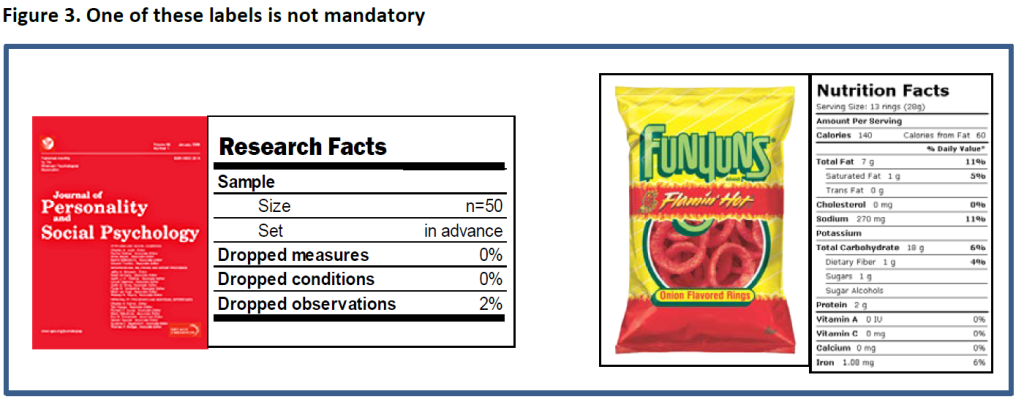

Both policies closely mirror the recommendations we made in our 21 Word Solution piece, where we contrasted the level of disclosure in science vs. food (see reprint of Figure 3).

Our proposed 21-word disclosure statement was:

We report how we determined our sample size, all data exclusions (if any), all manipulations, and all measures in the study.

Etienne Lebel tested an elegant and simple implementation in his PsychDisclosure project. Its success contributed to Psych Science's decision to implement disclosure requirements.

Starting Now

When reviewing for journals other than Psych Science and Management Science, what could reviewers do?

On the one hand, as reviewers we simply cannot do our jobs if we do not know fully what happened in the study we are tasked with evaluating.

On the other hand, requesting disclosure for an individual article one is reviewing risks authors taking the request personally (as if the reviewers doubted them) and risks revealing our identity as reviewers.

A solution is a uniform disclosure request that large numbers of reviewers include in every review they write.

Together with Etienne Lebel, Don Moore, and Brian Nosek, we created a standardized request that we and many others have already begun using in all of our reviews. We hope you will start using it too. With many reviewers including it in their referee reports, community norms will change:

I request that the authors add a statement to the paper confirming whether, for all experiments, they have reported all measures, conditions, data exclusions, and how they determined their sample sizes. The authors should, of course, add any additional text to ensure the statement is accurate. This is the standard reviewer disclosure request endorsed by the Center for Open Science [see http://osf.io/project/hadz3]. I include it in every review.