I want to comment on a recent article in the New York Times, but along the way I will comment on scientific reporting as well. I think that science reporters frequently fall short in assessing the evidence behind the claims they relay, but as I will try to show, assessing evidence is not an easy task. I don’t want scientists to stop studying cool topics, and I don’t want journalists to stop reporting cool findings, but I will suggest that they should make it commonplace to get input from uncool data scientists and statisticians.

Science journalism is hard. Those journalists need to maintain a high level of expertise in a wide range of domains while being truly exceptional at translating that content in ways that are clear, sensible, and accurate. For example, it is possible that Ed Yong couldn’t run my experiments, but I certainly couldn’t write his articles. [1]

I was reminded about the challenges of science journalism when reading an article about the health benefits of being a volunteer. The journalist, Nicole Karlis, seamlessly connects interviews with recent victims, interviews with famous researchers, and personal anecdotes.

It also cites some evidence in the form of three scientific findings. Like the journalist, I am not an expert in this area. The journalist’s profession requires her to float above the ugly complexities of the data, whereas my career is spent living amongst (and contributing to) those complexities. So I decided to look at those three papers.

OK, here are those references (the first two come together):

If you would like to see those articles for yourself, they can be found here (.html) and here (.html).

First the blood pressure finding. The original researchers analyze data from a longitudinal panel of 6,734 people who provided information about their volunteering and had their blood pressure measured. After adding a number of control variables [2], they look to see if volunteering has an influence on blood pressure. OK, how would you do that? 40.4% of respondents reported some volunteering. Perhaps they could be compared to the remaining 59.6%? Or perhaps there is a way to look at how the number of hours volunteered decreases units of blood pressure? The point is, there are a few ways to think about this. The authors found a difference only when comparing non-volunteers to the category of people who volunteered 200 hours or more. Their report:

“In a regression including the covariates, hours of volunteer work were related to hypertension risk (Figure 1). Those who had volunteered at least 200 hours in the past 12 months were less likely to develop hypertension than non-volunteers (OR=0.60; 95% CI:0.40–0.90). There was also a decrease in hypertension risk among those who volunteered 100–199 hours; however, this estimate was not statistically reliable (OR=0.78; 95% CI=0.48–1.27). Those who volunteered 1–49 and 50–99 hours had hypertension risk similar to that of non-volunteers (OR=0.95; 95% CI: 0.68–1.33 and OR=0.96; 95% CI: 0.65–1.41, respectively).”

So what I see is some evidence that is somewhat suggestive of the claim, but it is not overly strong. The 200-hour cut-off is arbitrary, and the effect is not obviously robust to other specifications. I am worried that we are seeing researchers choosing their favorite specification rather than the best specification. So, suggestive perhaps, but I wouldn’t be ready to cite this as evidence that volunteering is related to improved blood pressure.
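For readers who want to see how much evidence sits behind each of those intervals, here is a minimal sketch that backs out an approximate z statistic and two-sided p-value from each reported odds ratio and 95% confidence interval. The function name `z_and_p_from_ci` is my own, and the calculation assumes the intervals are ordinary Wald intervals (symmetric on the log-odds scale), which the paper's quoted text does not confirm. Treat the output as a rough reading of the published numbers, not a reanalysis of the data.

```python
import math


def z_and_p_from_ci(odds_ratio, ci_low, ci_high, z_crit=1.96):
    """Approximate z statistic and two-sided p-value implied by an OR and its 95% CI.

    Assumes the interval is symmetric on the log-odds scale (a Wald interval).
    """
    # Width of the CI on the log scale equals 2 * z_crit * standard error.
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * z_crit)
    z = math.log(odds_ratio) / se
    # Two-sided p-value under a standard normal reference distribution.
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p


# Odds ratios and 95% CIs as quoted above (each category vs. non-volunteers).
reported = {
    "1-49 hours": (0.95, 0.68, 1.33),
    "50-99 hours": (0.96, 0.65, 1.41),
    "100-199 hours": (0.78, 0.48, 1.27),
    "200+ hours": (0.60, 0.40, 0.90),
}

for label, (odds_ratio, low, high) in reported.items():
    z, p = z_and_p_from_ci(odds_ratio, low, high)
    print(f"{label:>14}: z = {z:6.2f}, approximate p = {p:.3f}")
```

Only the 200-hour category should come out below .05, which matches the interval excluding 1. The sketch says nothing about whether that category boundary was chosen before or after looking at the data, which is the real worry.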

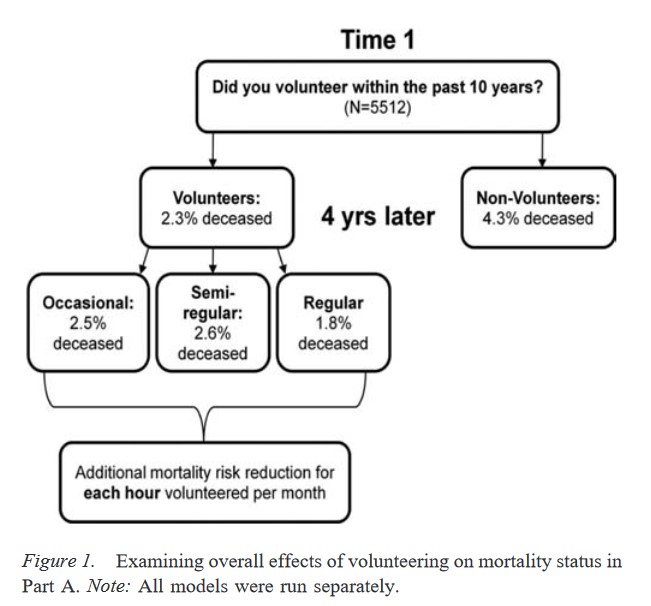

The second finding is “volunteering is linked to… decreased mortality rates.” That paper analyzes data from a different panel of 10,317 people who report their volunteer behavior and whose deaths are recorded. Those researchers convey their finding in the following figure:

So first, that is an enormous effect. People who volunteered were about 50% less likely to die within four years. Taken at face value, that would suggest an effect seemingly on the order of normal person versus smoker + drives without a seatbelt + crocodile-wrangler-hobbyist. But recall that this is observational data and not an experiment, so we need to be worried about confounds. For example, perhaps the soon-to-be-deceased also lack the health to be volunteers? The original authors have that concern too, so they add some controls. How did that go?

That is not particularly strong evidence. The effects are still directionally right, and many statisticians would caution against focusing on p-values… but still, that is not overly compelling. I am not persuaded. [3]

What about the third paper referenced?

That one can be found here (.html).

Unlike the first two papers, that is not a link to a particular result, but rather to a preregistration. Readers of this blog are probably familiar, but preregistrations are the time-stamped analysis plans of researchers from before they ever collect any data. Preregistrations – in combination with experimentation – eliminate some of the concerns about selective reporting that inevitably follow other studies. We are huge fans of preregistration (.html, .html, .html). So I went and found the preregistered primary outcome on page 8:

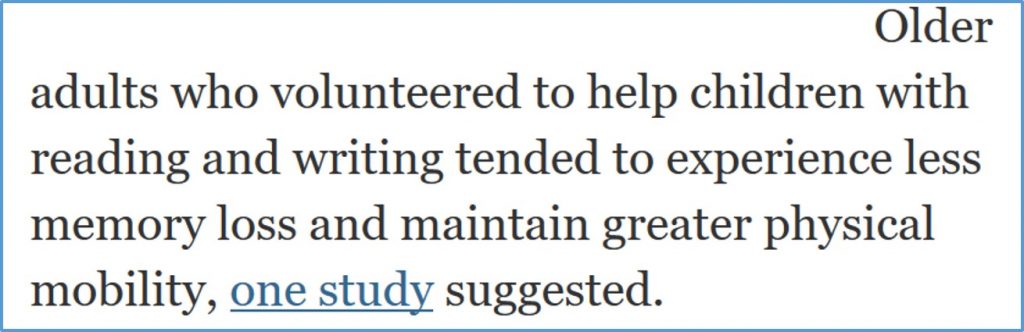

Perfect. That outcome is (essentially) one of those mentioned in the NY Times. But things got more difficult for me at that point. This intervention was an enormous undertaking, with many measures collected over many years. Accordingly, though the primary outcome was specified here, a number of follow-up papers have investigated some of those alternative measures and analyses. In fact, the authors anticipate some of that by saying “rather than adjust p-values for multiple comparison, p-values will be interpreted as descriptive statistics of the evidence, and not as absolute indicators for a positive or negative result.” (p. 13). So they are saying that, outside of the mobility finding, p-values shouldn’t be taken quite at face value. This project has led to some published papers looking at the influence of the volunteerism intervention on school climate, Stroop performance, and hippocampal volume, amongst others. But the primary outcome – mobility – appears to be reported here (.html). [4] What do they find?

Well, we have the multiple comparison concern again – whatever difference exists is only found at 24 months, but mobility has been measured every four months up until then. Also, this is only for women, whereas the original preregistration made no such specification. What happened to the men? The authors say, “Over a 24-month period, women, but not men, in the intervention showed increased walking activity compared to their sex-matched control groups.” So the primary outcome appears not to have been supported. Nevertheless, making interpretation a little challenging, the authors also say, “the results of this study indicate that a community-based intervention that naturally integrates activity in urban areas may effectively increase physical activity.” Indeed, it may, but it also may not. These data are not sufficient for us to make that distinction.
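To put a rough number on the multiple comparison concern, here is a minimal sketch of how the chance of at least one false positive grows with the number of tests. It rests on two assumptions that are mine, not the paper's: that "every four months" up to 24 months means six measurement occasions, and that the tests are independent. Repeated measures on the same people are correlated, so the independent-test figure is an upper-end illustration rather than an estimate for this study.

```python
def familywise_error_rate(n_tests, alpha=0.05):
    """Chance of at least one false positive across n_tests independent tests."""
    return 1 - (1 - alpha) ** n_tests


# Assumption: mobility was measured at months 4, 8, ..., 24 (six occasions).
time_points = list(range(4, 25, 4))
# Assumption: results were examined separately for women and for men.
n_sexes = 2

scenarios = [
    ("one preregistered test", 1),
    ("a test at each time point", len(time_points)),
    ("each time point, split by sex", len(time_points) * n_sexes),
]

for label, n_tests in scenarios:
    rate = familywise_error_rate(n_tests)
    print(f"{label:>30}: {n_tests:2d} tests, familywise error rate = {rate:.2f}")
```

The point of the sketch is only the direction of the effect: a single difference appearing at one time point in one subgroup is much less surprising under the null than a single preregistered test passing.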

That’s it. I see three findings, all of which are intriguing to consider, but none of which are particularly persuasive. The journalist, who presumably has been unable to read all of the original sources, is reduced to reporting their claims. The readers, who are even more removed, take the journalist’s claims at face value: “if I volunteer then I will walk around better, lower my blood pressure, and live longer. Sweet.”

I think that we should expect a little more from science reporting. It might be too much for every journalist to dig up every link, but perhaps they should develop a norm of collecting feedback from those people who are informed enough to consider the evidence, but far enough outside the research area to lack any investment in a particular claim. There are lots of highly competent commentators ready to evaluate evidence independent of the substantive area itself.

There are frequent calls for journalists to turn away from the surprising and uncertain in favor of the staid and uncontroversial. I disagree – surprising stories are fun to read. I just think that journalists should add an extra level of scrutiny to ensure that we know that the fun stories are also true stories.

Author Feedback.

I shared a draft of this post with the contact author for each of the four papers I mention, as well as the journalist who had written about them. I heard back from one, Sara Konrath, who had some helpful suggestions including a reference to a meta-analysis (.html) on the topic.

Footnotes.

- Obviously Mr. Yong could run my experiments better than me also, but I wanted to make a point. At least I can still teach college students better than him though. Just kidding, he would also be better at that. [↩]

- average systolic blood pressure (continuous), average diastolic blood pressure (continuous), age (continuous), sex, self-reported race (Non-Hispanic White, Non-Hispanic Black, Hispanic, Non-Hispanic Other), education (less than high school, General Equivalency Diploma [GED], high school diploma, some college, college and above), marital status (married, annulled, never married, divorced, separated, widowed), employment status (employed/not employed), and self-reported history of diabetes (yes/no), cancer (yes/no), heart problems (yes/no), stroke (yes/no), or lung problems (yes/no). [↩]

- It is worth noting that this paper, in particular, goes on to consider the evidence in other interesting ways. I highlight this portion because it was the fact being cited in the NYT article. [↩]

- I think. It is really hard for me, as a novice in this area, to know if I have found all of the published findings from this original preregistration. If there is a different mobility finding elsewhere I couldn’t find it, but I will correct this post if it gets pointed out to me. [↩]