I recently joined a large group of academics in co-authoring a paper looking at how political science, economics, and psychology are working to increase transparency in scientific publications. Psychology is leading, by the way.

Working on that paper (and the figure below) actually changed my mind about something. A couple of years ago, when Joe, Uri, and I wrote “False Positive Psychology,” we were not really advocates of preregistration (à la clinicaltrials.gov). We saw it as an implausible superstructure of unspecified regulation. Now I am an advocate. What changed?

First, let me relate an anecdote originally told by Don Green (and related with more subtlety here). He described watching a research presentation that at one point emphasized a subtle three-way interaction. Don asked, “did you preregister that hypothesis?” and the speaker said “yes.” Don, as he relates it, was amazed. Here was this super complicated pattern of results, but it had all been predicted ahead of time. That is convincing. Then the speaker said, “No. Just kidding.” Don was less amazed.

The gap between those two reactions is the reason I am trying to start preregistering my experiments. I want people to be amazed.

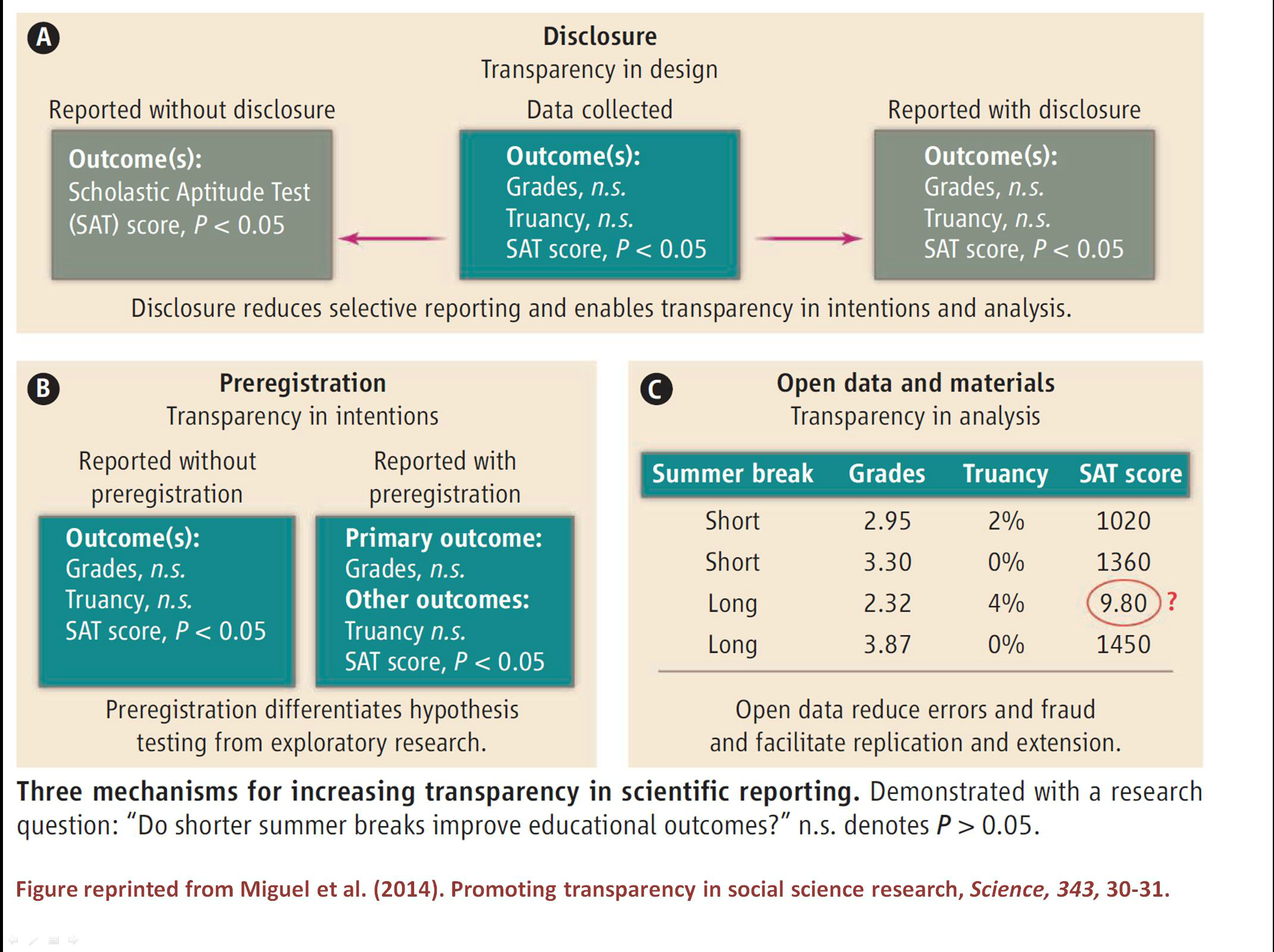

The single most important scientific practice that Uri, Joe, and I have emphasized is disclosure (i.e., the top panel in the figure). Transparently disclose all manipulations, measures, exclusions, and sample size specification. We have been at least mildly persuasive, as a number of journals (e.g., Psychological Science, Management Science) are requiring such reporting.

Meanwhile, for me as a researcher, transparency creates a rhetorical problem. When I conduct experiments, for example, I typically collect a single measure that I see as the central test of my hypothesis. But, like any curious scientist, I sometimes measure some other stuff in case I can learn a bit more about what is happening. If I report everything, then my confirmatory measure is hard to distinguish from my exploratory measures. As outlined in the figure above, a reader might reasonably think, “Leif is p-hacking.” My only defense is to say, “No, that first measure was the critical one. These other ones were bonus.” When I read things like that, I am often imperfectly convinced.

How can Leif the researcher be more convincing to Leif the reader? By saying something like, “The reason you can tell that the first measure was the critical one is because I said that publicly before I ran the study. Here, go take a look. I preregistered it.” (i.e., the left panel of the figure).

Note that this line of thinking is not even vaguely self-righteous. It isn’t pushy. I am not saying, “you have to preregister or else!” Heck, I am not even saying that you should; I am saying that I should. In a world of transparent reporting, I choose preregistration as a way to selfishly show off that I predicted the outcome of my study. I choose to preregister in the hopes that one day someone like Don Green will ask me, and that he will be amazed.

I am new to preregistration, so I am going to be making lots of mistakes. I am not going to wait until I am perfect (it would be a long wait). If you want to join me in trying to add preregistration to your research process, it is easy to get started. Go here, open an account, set up a page for your project, and, when you’re ready, preregister your study. There is even a video to help you out.