You can’t un-ring a bell. Once people receive information, even if it is taken back, they cannot disregard it.

Teachers cannot imagine what novice students don’t know, juries cannot follow instructions to disregard evidence, negotiators cannot take the perspective of their counterpart who does not know what they know, etc. People exhibit “outcome bias”, “hindsight bias”, “input bias”, “curse-of-knowledge”, etc.

The two of us, Berkeley Dietvorst (Booth – U of Chicago .htm) and Uri, just published a paper (.htm) suggesting that, actually, people generally can ignore information; the issue is that they don’t want to. Here it goes in about 500 words.

Studies 1 & 2: About 70% of people think information they "should" ignore is helpful

Participants completed a study based on the classic 1975 study by Fischhoff (.htm), where participants given the “correct” answer to a multiple-choice question over-estimated how many people would give that answer. We asked (paraphrasing): “Would using the information increase accuracy?” About 70% of people thought using the correct answer would lead to greater accuracy. The same held for the Camerer et al. 1989 (.htm) “curse-of-knowledge” paradigm; see footnote [1]. In other words, participants don't share the assumption made by the experimenters, that they "should" ignore the information. They probably were not even trying to ignore it.

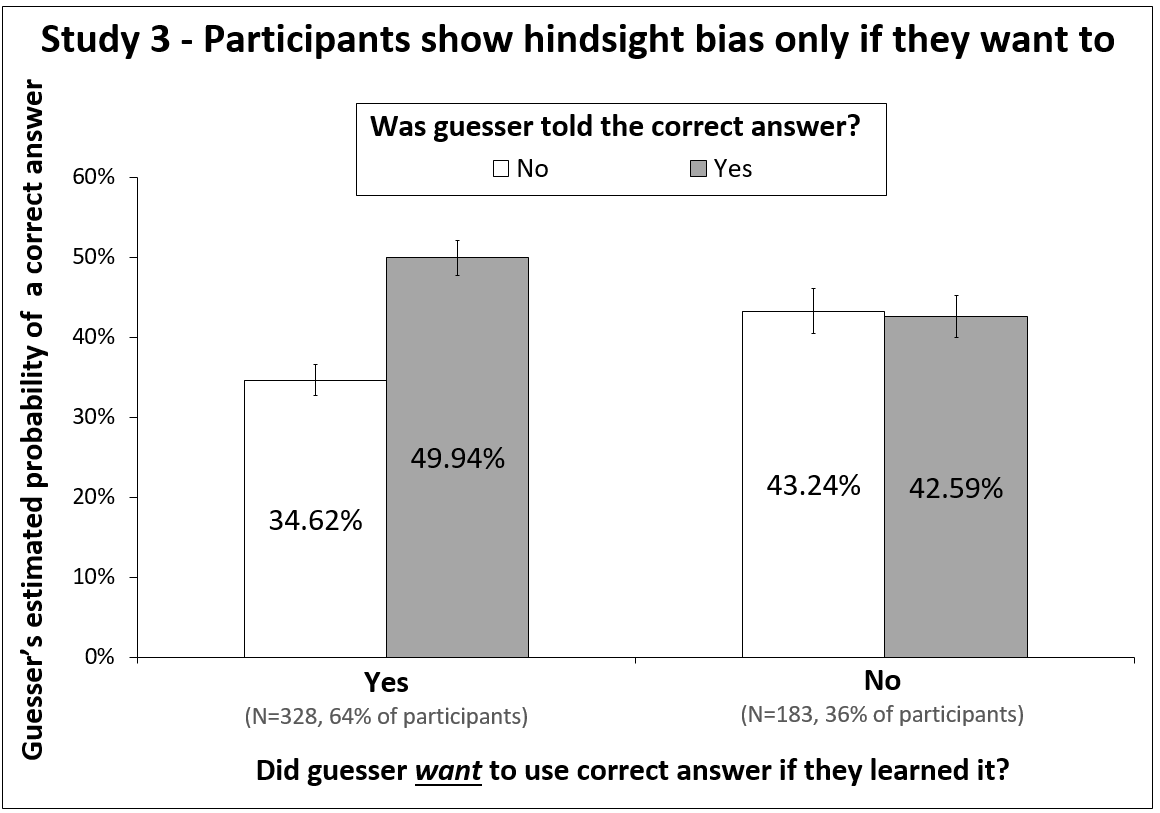

Study 3: People use information they "should" ignore, only if they want to

Here people predicted how another person would answer a multiple-choice question.

First, we asked participants (paraphrasing): “If we were to give you the correct answer, would you use it to estimate the other person’s response?” Then we randomly assigned participants to either learn or not learn the correct answer. We found that only participants who said they wanted to use the correct answer showed hindsight bias.

Figure 1. Guessed probability that respondents will answer correctly.

Error bars show 1 standard error above or below sample means.

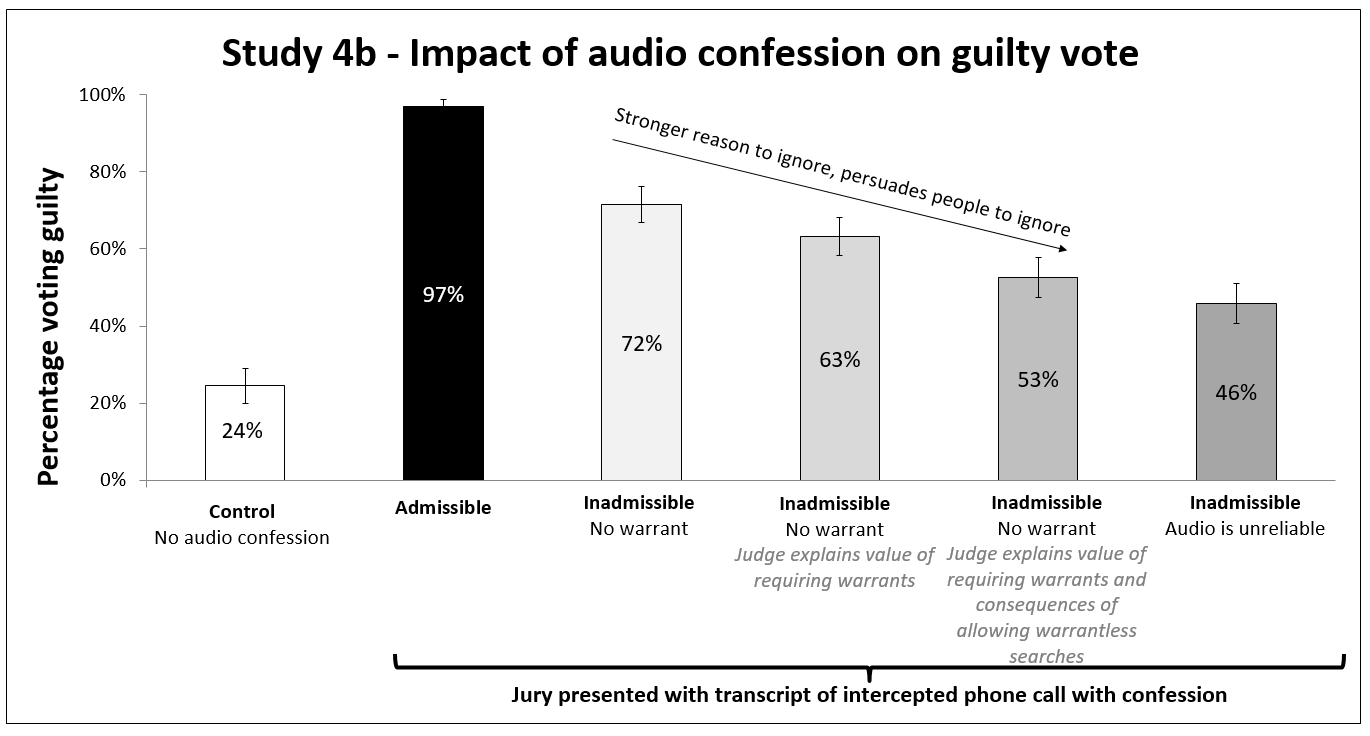

Study 4: Persuading the jury

One reason we were excited about this project is that one would often like to persuade others to act as if they did not know what they do know. E.g., getting jurors to follow instructions to disregard evidence.

If people pay attention to information because they cannot ignore it, then it’s game over; you have to live with hindsight bias, etc. On the other hand, if people use information because they choose to, then perhaps you can persuade them not to use the information.

Here we modified a “mock jury” paradigm.

A man is accused of killing his ex-wife and male neighbor. The evidence is weak, except for an intercepted phone call where the man confesses. The question is whether the judge’s instruction that jurors should ignore the phone call actually gets them to ignore it.

Kassin and Sommers (1997 .htm) showed that if the judge instructs jurors to ignore the call because it is unreliable (e.g., the recording is of poor quality and not understandable), then many people do, in fact, vote not-guilty. However, if the reason given is that the call was intercepted without a warrant, many more people vote guilty. First, we replicated the finding and found it was 'mediated' by wanting to use the information. Then, we asked: can you persuade people to ignore valid but illegally obtained information?

We found that when the judge explained the consequences of allowing warrantless searches, many jurors were persuaded and guilty votes dropped.

Figure 3 (in paper). Participants (N= 571). Reason given by judge to ignore incriminating evidence and guilty votes.

Error bars show 1 standard error above/below each proportion.
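For readers who want to see what such an error bar amounts to, here is a minimal sketch, assuming the usual formula for the standard error of a sample proportion, sqrt(p(1 − p)/n). The function names and the counts are hypothetical and chosen only for illustration; they are not the paper's data, and the paper may have computed its bars differently.

```python
import math


def proportion_standard_error(successes: int, n: int) -> float:
    """Standard error of a sample proportion: sqrt(p * (1 - p) / n)."""
    if n <= 0:
        raise ValueError("n must be positive")
    if not 0 <= successes <= n:
        raise ValueError("successes must be between 0 and n")
    p = successes / n
    return math.sqrt(p * (1 - p) / n)


def error_bar(successes: int, n: int) -> tuple[float, float]:
    """Return (lower, upper): the proportion minus and plus 1 standard error."""
    p = successes / n
    se = proportion_standard_error(successes, n)
    return (p - se, p + se)


# Hypothetical counts for illustration only (NOT taken from the paper):
# 50 guilty votes out of 100 mock jurors in one condition.
lower, upper = error_bar(successes=50, n=100)
print(f"proportion = 0.50, error bar = [{lower:.2f}, {upper:.2f}]")
```

The bar gets narrower as the number of participants in a condition grows, which is why conditions with more respondents show tighter bars.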

Punch line

People intentionally use information they "should" ignore, and it seems that they can often ignore it when they want to. So, we know you could ignore our findings and continue believing that hindsight bias, outcome bias, input bias, etc., are unintentional. The question is: do you want to?

Our full forthcoming paper is here: .htm

Footnotes.

- In the “curse-of-knowledge” paradigm, students were told various companies’ earnings and were asked to make judgments that relied on forecasts of uninformed people’s earnings expectations. Camerer et al. found that the students’ guesses were biased by the private information they had. We find that people believe that using this private information will increase accuracy, so presumably they use it on purpose.